The number of misconceptions in the tech world can be overwhelming, but few are more frustrating than those surrounding low-resolution CPU benchmarks. We think those take the cake, but we'll admit there are plenty of great – we mean frustrating – myths to choose from. Today, however, we're focusing on low-resolution testing, for the third time.

The first time we addressed this topic, at least in recent times, was almost two years ago. While that discussion is still relevant and effectively covers the subject, it appears to have missed the mark for some. The number of people commenting on 9800X3D reviews, insisting that testing at 1080p is outdated, unrealistic, or misleading, remains disappointingly high.

So, we’re revisiting the subject once more, and we hope the examples and explanations we’re providing this time will help clarify things. To be fair, we have received plenty of positive feedback on previous articles from readers thanking us for addressing this topic. Many have said it helped them better understand why low-resolution, CPU-limited testing is the best and truly only way to assess the gaming performance of CPUs. This support is encouraging.

We also recognize that the resistance comes primarily from a vocal minority who may not fully understand the subject or may be misunderstanding what a CPU review is meant to convey. Most readers don’t need this explanation, but we believe there’s some valuable data here that will interest those who are already familiar with the subject. Before we dive into the benchmark graphs, let’s set the stage with a few preliminary insights.

The Casual 60 FPS Gamer

First, what kind of gamer are you, and what level of performance do you actually need to enjoy your games? Do you only require 60 fps or less? If so, CPU performance is unlikely to be a significant factor for you. We’d consider this type of experience to be more casual gaming, and that’s perfectly fine.

Casual gaming simply differs from competitive gaming, which generally demands well over 100 fps in titles like Counter-Strike 2. High-refresh-rate gaming, though also possible in a casual setting, is more of a high-end experience.

What isn’t fine is imposing a single viewpoint on everyone. While it might seem that low-resolution benchmarking enforces a particular standard, it actually provides the full picture – the entire spectrum of gaming performance.

Not everyone is happy with 60 fps, and for some, even 100 fps isn’t enough. There are games where increasing from 200 fps to 300 fps, or even higher, genuinely impacts the experience. If you don’t believe that or find it difficult to accept, it may reflect more about your personal gaming experience, play style, or even personality – but we’re not here to analyze that, so we won’t go further down that path.

To be clear, gaming at 30 fps, 60 fps, or any frame rate that suits you is perfectly fine. You’re welcome to enjoy games at whatever frame rate you prefer. Just be aware that many PC gamers aren’t content with 30 fps or even 60 fps; in fact, many feel most comfortable gaming well above 100 fps. This is especially true for competitive shooters, where lower frame rates can make it harder to perform well and can place players at a disadvantage.

So, if you’re only looking for, say, 60 fps at 4K, be aware that most CPU reviews aren’t necessarily tailored just for you, and in fact, high-end CPU reviews probably don’t apply to you at all. Of course, we’d love for you to check out these reviews and encourage you to do so, but realistically, the information presented – regardless of the resolution tested – might not offer much practical value, at least in the near term.

“We Want Real-World Results”

We often hear arguments like, “I just want to see 1440p and 4K results because they’re more ‘real-world’; they let me know if a CPU upgrade will give me any additional performance.”

The problem with this is, we don't know what games you're playing right now or the games you'll be playing in the future. While we can't predict exactly how future games will perform on current hardware, it's safe to assume they'll be more demanding than today's titles, and that includes the load they place on your CPU.

We also don’t know what settings you’ll be using, as this depends largely on the minimum frame rate you prefer for gaming. Typically, single-player gamers and some multiplayer gamers use the highest quality settings they can manage while still meeting their minimum acceptable frame rate. We know this to be true from polling over 100,000 people in the past, and it’s also a logical assumption.

Testing a modern AAA title at 4K with quality settings that result in a GPU-imposed limit at 60 fps or lower isn’t helpful if you prefer gaming at 90 fps or more. Moreover, if you’re content with 60 fps or lower, high-end CPUs likely aren’t necessary for your setup, and reviews of these CPUs won’t be particularly relevant to your needs.

You Can’t Toggle CPU Performance

A key reason for determining a CPU’s true gaming performance through low-resolution, CPU-limited gaming tests is that CPU performance doesn’t scale in the same way as GPU performance.

For instance, if you’re gaming with an RTX 4070 and want to increase your frame rate in a game like Space Marine 2, there are numerous ways to achieve this, provided you aren’t CPU-limited. You can lower the resolution, enable upscaling, or adjust various visual quality settings – any of these changes can significantly boost your fps.

However, if your CPU can only handle 80 fps in Space Marine 2, then 80 fps is your maximum. Lowering the resolution, enabling upscaling, or turning off features won’t usually increase your fps beyond 80 in this case. It’s rare to find a setting that significantly affects CPU performance. Some games allow you to adjust the number of NPCs, which can impact CPU load, but even this rarely results in a substantial performance change.

In contrast to GPU bottlenecks, which can almost always be alleviated by compromising on visuals, there’s little you can do when you hit a CPU bottleneck – you’ve essentially reached a hard limit.
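A simple way to picture this is to treat the frame rate you see as the lower of two caps: what the CPU can prepare and what the GPU can render at your chosen settings. The sketch below assumes that simplified min() model, which ignores frame-time variance and any overlap between the two. Only the 80 fps CPU cap comes from the Space Marine 2 example above; the per-preset GPU figures are hypothetical.

```python
# Simplified bottleneck model: delivered fps is capped by the slower component.
# Assumption: a plain min() relationship; real frame pacing is messier.

def effective_fps(cpu_cap_fps: float, gpu_fps: float) -> float:
    """Return the frame rate you'd see if the slower component sets the pace."""
    return min(cpu_cap_fps, gpu_fps)


CPU_CAP = 80.0  # the CPU limit from the Space Marine 2 example

# Hypothetical GPU-limited frame rates for one graphics card at each preset.
GPU_FPS_BY_PRESET = {
    "Ultra": 65.0,
    "High": 85.0,
    "Medium": 110.0,
    "Low": 140.0,
}

for preset, gpu_fps in GPU_FPS_BY_PRESET.items():
    fps = effective_fps(CPU_CAP, gpu_fps)
    limiter = "GPU" if gpu_fps < CPU_CAP else "CPU"
    print(f"{preset:>6}: {fps:.0f} fps ({limiter}-limited)")
```

Lowering the preset helps only until the GPU figure passes 80 fps; from High downward, every preset lands on the same CPU-imposed ceiling.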

Reviews Aren’t Evil Temptations

Therefore, when checking reviews for your next CPU upgrade, it's important to determine how CPU A really performs relative to CPU B, allowing you to make an informed purchase.

Moreover, the purpose of a review isn't to sell you a new processor or even upsell you on a processor. The 9800X3D is a seriously impressive gaming CPU, but if you already own a high-end CPU such as the 14900K or 7800X3D, you shouldn't feel pressured into upgrading, as you already have a very capable CPU. In fact, CPU reviews shouldn't be viewed as incentives to upgrade; that's not their purpose.

Instead, focus on monitoring your system’s performance during gaming. First, ask yourself if the system is performing at a level you’re happy with. If it is, there’s no need for any changes. If you want additional performance, identify what’s limiting you – the CPU or GPU.

Monitoring GPU usage is the easiest way to determine this: if GPU usage drops below around 95%, you are likely CPU-limited. If it's well below that, you're not getting anywhere near the full value out of your GPU, which is likely the most expensive component in your system.
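As a rough illustration of that rule of thumb, the sketch below takes GPU-usage samples (in percent), as logged by whatever monitoring overlay you already use, and flags a likely CPU limit when typical usage sits below about 95%. Using the median and the 80% "well below" cut-off are our own assumptions for illustration, not an established standard.

```python
from statistics import median

# Rule of thumb from the text: sustained GPU usage below ~95% suggests a CPU limit.
CPU_LIMIT_THRESHOLD = 95.0
# Hypothetical cut-off for "well below", chosen only for illustration.
WELL_BELOW_THRESHOLD = 80.0


def classify_bottleneck(gpu_usage_samples: list[float]) -> str:
    """Classify a gaming session from GPU-usage samples given in percent."""
    if not gpu_usage_samples:
        raise ValueError("need at least one GPU usage sample")
    typical = median(gpu_usage_samples)  # the median resists brief spikes and dips
    if typical >= CPU_LIMIT_THRESHOLD:
        return "GPU-limited"
    if typical >= WELL_BELOW_THRESHOLD:
        return "likely CPU-limited"
    return "CPU-limited, GPU heavily underused"


print(classify_bottleneck([98, 99, 97, 99, 98]))
print(classify_bottleneck([88, 91, 86, 90, 89]))
print(classify_bottleneck([62, 58, 70, 65, 60]))
```

Samples should come from the demanding sections you actually care about; a menu screen or a quiet corridor can tell a very different story.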

Cost Per Frame

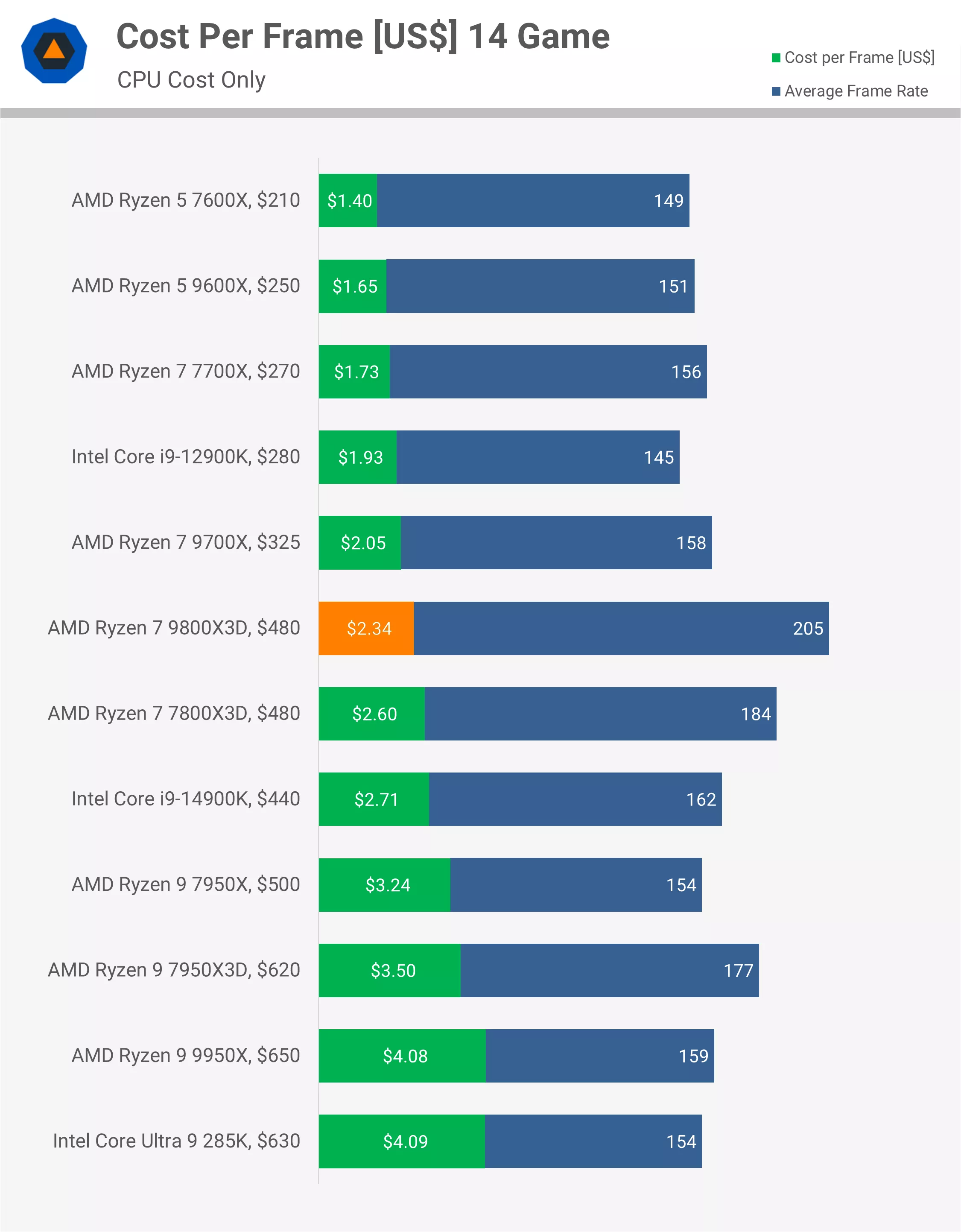

If you do need a CPU upgrade, reviews and benchmarks are the best way to determine what you should buy, especially when low-resolution testing is involved.

Low-resolution testing reveals the CPU’s true performance, helping you make informed “cost per frame” comparisons. For example, our 9800X3D review acknowledges that AMD’s latest 3D V-Cache CPU is now the world’s fastest gaming CPU, though it isn’t the best value for gaming.

That distinction goes to CPUs like the Ryzen 5 7500F, 7600, or 7600X, as our 7600X data shows. Similarly, the 9600X, 7700X, and 9700X offer better value than the 9800X3D at various performance levels.

So, even if you think low-resolution testing doesn’t reflect real-world conditions or might steer you toward a CPU you don’t need, these arguments fall flat when you consider the cost-per-frame value of these components in direct comparisons.
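Cost per frame is simply price divided by average frame rate, so lower is better. The sketch below uses the figures quoted in the Star Wars Jedi: Survivor results: $630 for the 285K, an expected $480 for the 9800X3D, 234 fps for the 9800X3D at 1080p, and roughly 155 fps for the 285K (our approximation, derived from the stated 51% margin). A single game is only for illustration; a real value comparison would use an average across many titles and include cheaper parts such as the 7600X.

```python
# Cost per frame = price / average fps; lower means better value.
# Inputs come from the Star Wars Jedi: Survivor section (285K fps is approximate).

def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid per frame of average performance."""
    if avg_fps <= 0:
        raise ValueError("average fps must be positive")
    return price_usd / avg_fps


cpus = {
    "Core Ultra 9 285K": (630.0, 155.0),
    "Ryzen 7 9800X3D": (480.0, 234.0),
}

# Sort from best value (lowest cost per frame) to worst.
ranked = sorted(cpus.items(), key=lambda item: cost_per_frame(*item[1]))
for name, (price, fps) in ranked:
    print(f"{name}: ${cost_per_frame(price, fps):.2f} per frame")
```

Because the fps input is the CPU-limited result, the ranking reflects what each processor can actually deliver rather than what a GPU cap allows.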

There is a Reason Why Almost All Reviews Focus on Low-res Testing

As mentioned earlier, we recognize that most of you understand this, though a vocal minority may continue to resist low-resolution testing. Our goal is to clarify things for newcomers who may feel genuinely confused.

For those who still disagree, have you considered why every credible source and trusted industry expert relies heavily on low-resolution gaming benchmarks when evaluating CPU gaming performance? There isn’t a single respected source that doesn’t. While some reviewers include 1440p and 4K benchmarks, they do not emphasize them.

We did include those tests for a while after initially caving to demand, but we've since phased 1440p and 4K testing out of most CPU reviews, realizing they can be misleading and offer little value – and we'll show you exactly why in a moment. We may still include them where it makes sense, for example in GPU/CPU scaling testing.

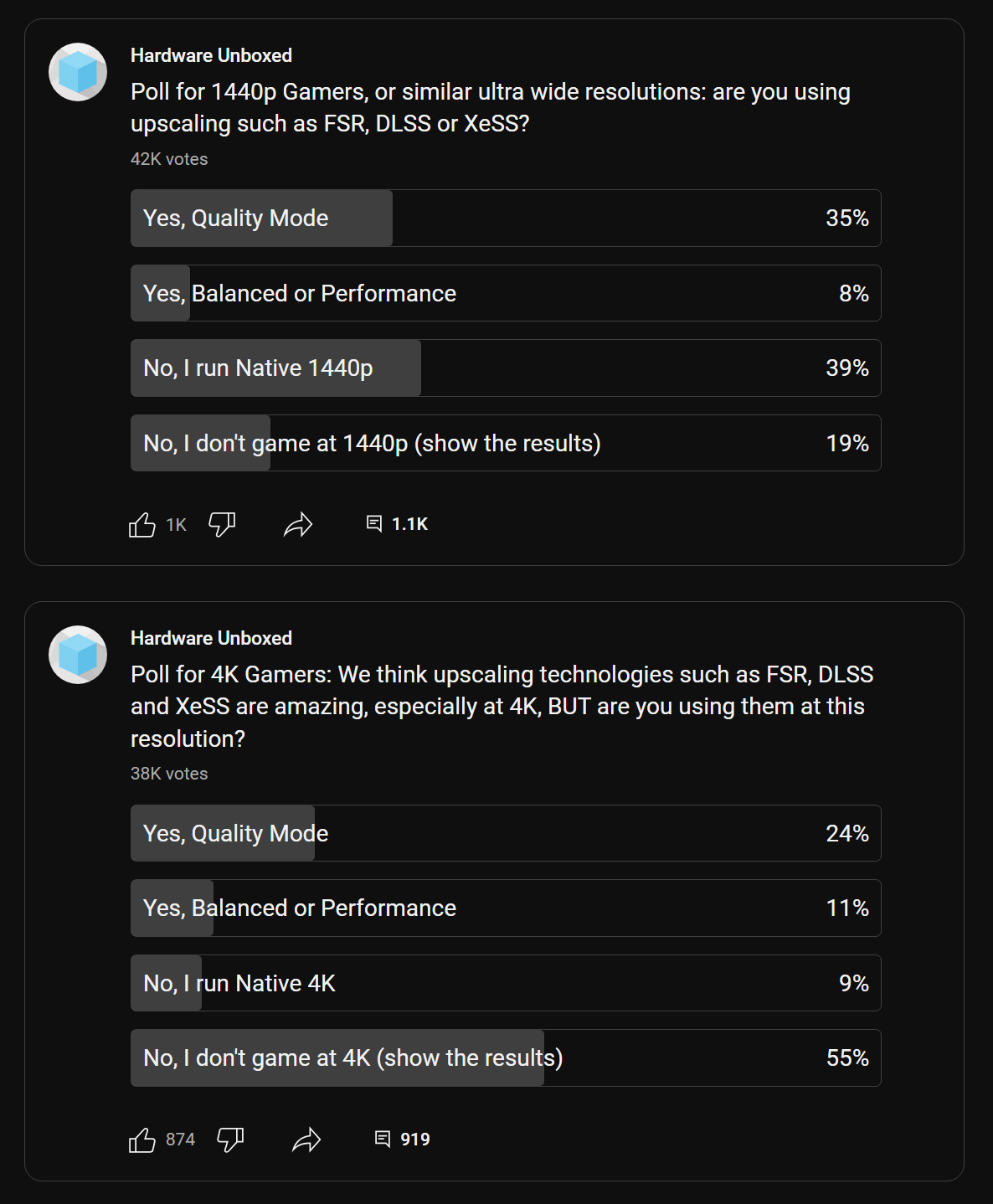

Finally, before we get into the benchmarks, let’s briefly mention the real-world relevance of 4K CPU benchmarking, or even 4K benchmarking in general. While 4K resolution is quite useful for assessing GPU performance, many gamers who play at 4K don’t use native resolution. Instead, they enable FSR, DLSS, or XeSS, opting for either the Performance, Balanced, or Quality preset.

A recent HUB poll with over 35,000 participants found that about half of gamers use upscaling, so we’ve decided to include some 4K DLSS Balanced results. There’s a lot to cover, so let’s start by comparing the Core Ultra 9 285K, Ryzen 7 7700X, and Ryzen 7 9800X3D, all using the RTX 4090.

Benchmarks

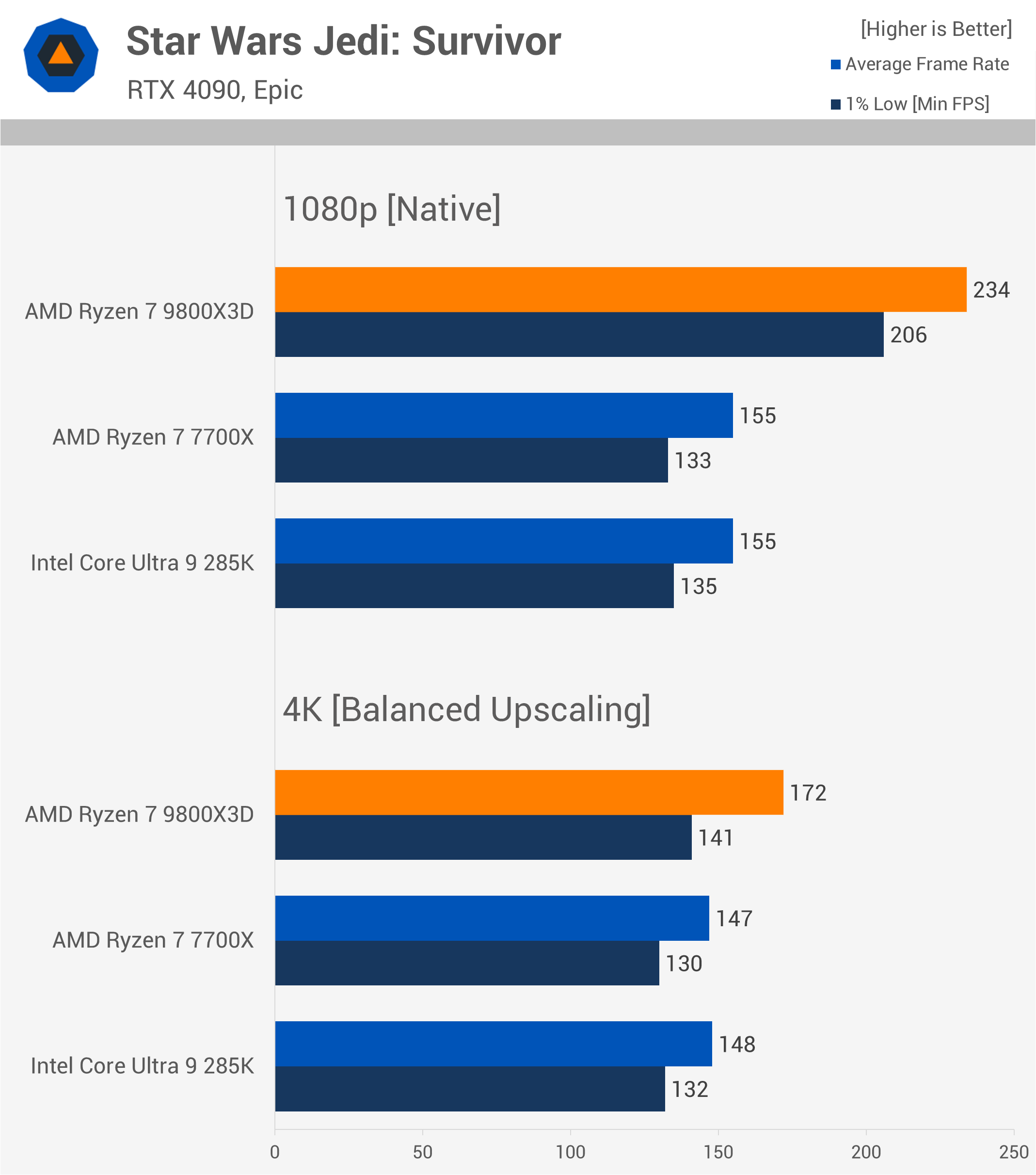

Star Wars Jedi: Survivor

First, we have Star Wars Jedi: Survivor. At 1080p, the 285K and 7700X perform closely, while the 9800X3D is brutally fast, delivering 234 fps and making it 51% faster. Now, you do not need 234 fps on average to enjoy this game; in fact, 155 fps on average is plenty, so the 285K and 7700X are adequate here.

What this data tells us is that when placed under a heavy gaming load, the 9800X3D can deliver 51% more performance, which is a substantial increase. While not particularly useful in this example, it almost certainly will be in the future. So if you're primarily gaming, you want to know this information before spending $630 on the 285K, as opposed to what should be $480 for the 9800X3D.

Now, if we were to test at 4K with DLSS Balanced upscaling enabled, we’d still find that the 9800X3D is indeed faster than the 285K and 7700X, though now by just 16%. So there’s still a performance benefit even under ‘real-world’ conditions. That said, everything we learned from the 1080p data remains true: the 285K and 7700X are more than fast enough in this title, both comfortably delivering a high refresh rate experience.

This also doesn’t necessarily tell you how a more affordable GPU, such as the RTX 4070, would perform, since we’re using the maximum or Epic quality preset. If, for whatever reason, you needed higher frame rates, you could simply downgrade the quality preset to High or even Medium, which would massively boost performance – assuming you have the CPU headroom.

So, once again, while GPU performance scales massively with quality settings, CPU performance does not, at least not to the same extent.

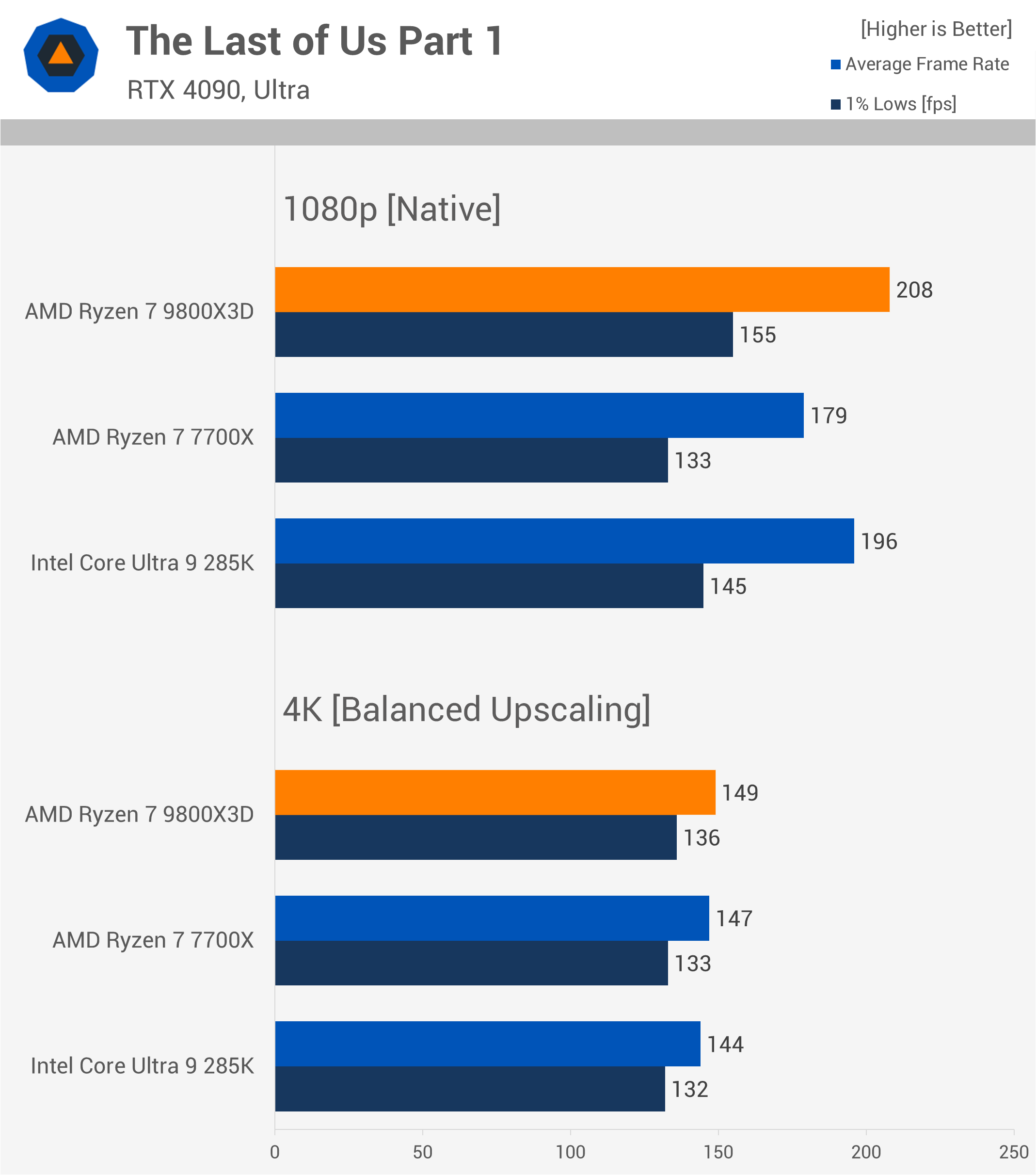

The Last of Us Part 1

The results in The Last of Us Part 1 are what many who criticize low-resolution CPU testing might want to see. At 1080p, the 9800X3D is 16% faster than the 7700X, but at 4K with upscaling, it’s only 1.4% faster, which might make the 9800X3D seem pointless for 4K gamers.

This is both true and untrue – it's ultimately too broad a statement, painting all gamers with the same brush. Many AAA games are used as benchmarking tools rather than as precise performance guides for each specific game, though they can serve both purposes.

In this case, with the CPUs we're looking at, the actual fps numbers become less relevant. You don't need over 200 fps to play and enjoy The Last of Us Part 1. Most gamers, ourselves included, would be thrilled with 140 fps, and all three CPUs can deliver that level of performance, as seen at 1080p. Given this, introducing a GPU bottleneck just states the bleeding obvious.

Yes, it’s worth noting that upgrading from a 7700X to a 9800X3D likely won’t deliver 16% more performance in The Last of Us Part 1, but that should be clear – the 7700X averages 179 fps at 1080p. So if you’re content with 140 fps, 100 fps, or anything below the 1080p numbers, the data will reflect a GPU limitation, not a CPU one.

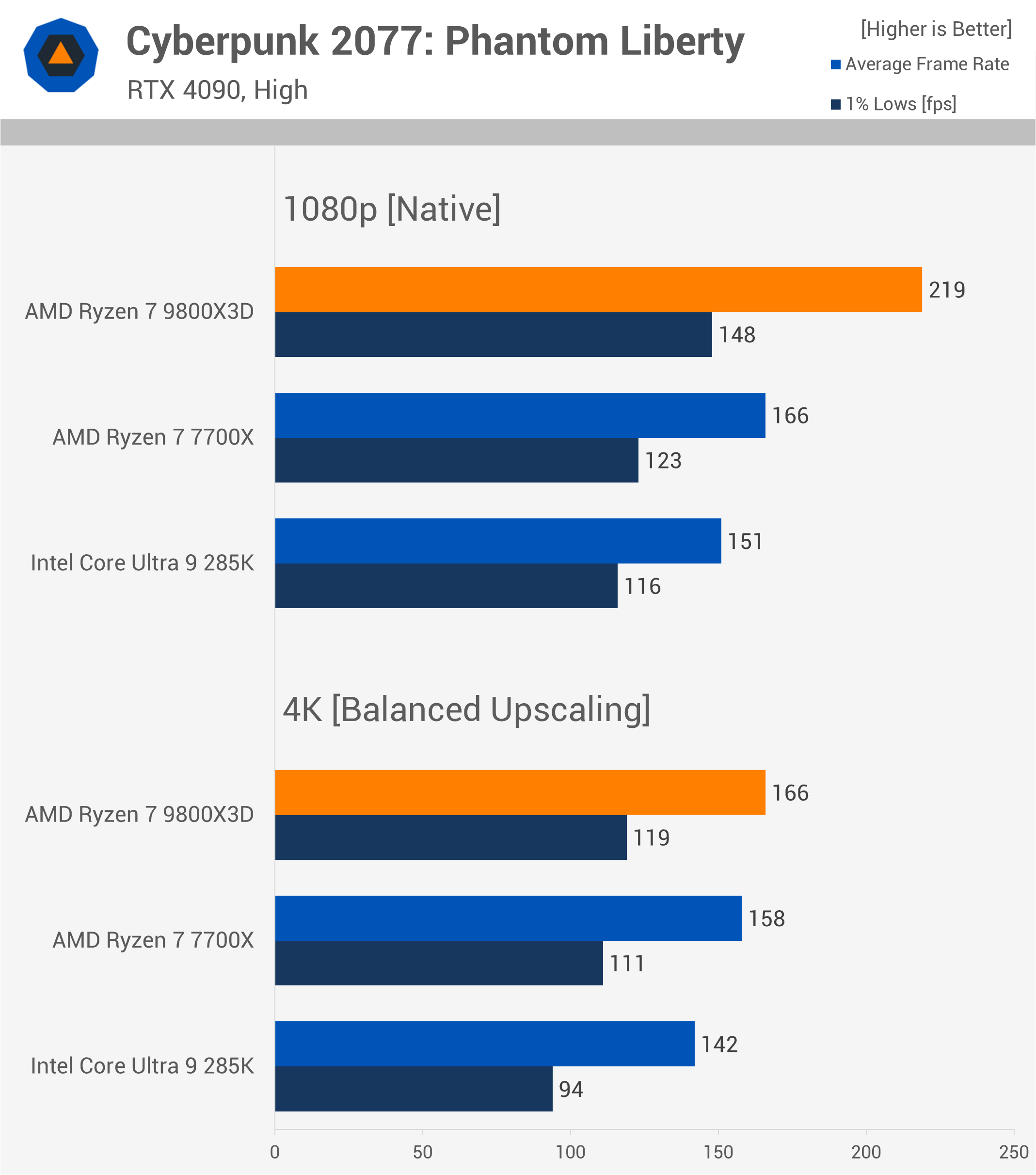

Cyberpunk 2077: Phantom Liberty

A similar story is seen in Cyberpunk 2077: Phantom Liberty: the 9800X3D is 32% faster than the 7700X at 1080p but only 5% faster at 4K with upscaling. It's still 17% faster than the 285K, which is significant. If you're happy with an average of 140 fps, the 285K will suffice, but it's still a poor value choice for gaming.

Here, Cyberpunk 2077 serves more as a tool to gauge the true gaming capabilities of these CPUs, providing insight into how they will age and which will fare best over time. But unless you need over 200 fps, the 9800X3D isn’t essential for this game.

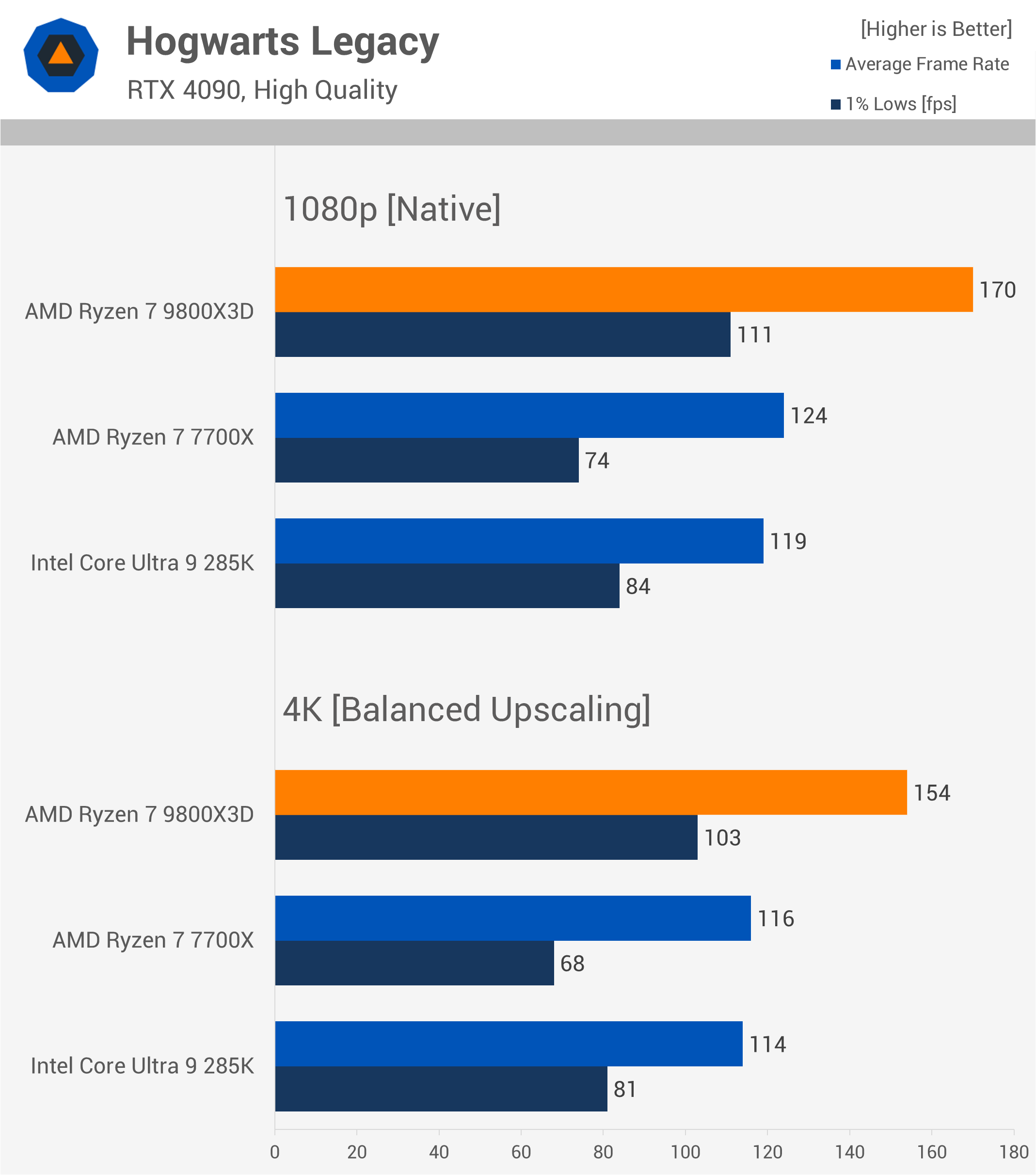

Hogwarts Legacy

A game where the 4K argument completely falls apart is Hogwarts Legacy, which is incredibly CPU-demanding. At 1080p, the 9800X3D is 37% faster than the 7700X and 43% faster than the 285K. Increasing the resolution to 4K with upscaling enabled, the 9800X3D still holds a 33% lead over the 7700X and a 35% lead over the 285K, so still massive margins.

These are not only significant but can be game-changing margins for those who prioritize a high-refresh-rate experience. However, we’re using the High preset here; if you were to enable Ultra, the performance margins would narrow, along with the frame rate.

It all comes back to the casual vs. competitive frame rate discussion: if you’re content with 60 fps and all visual settings maxed out, including ray tracing, CPU performance within reason doesn’t really matter – you certainly don’t need the 9800X3D. But as a tool to measure CPU performance under heavy gaming loads, it’s clear the 9800X3D is superior, providing the best gaming experience, even at 4K with the right settings like High preset and Balanced upscaling.

Assetto Corsa Competizione

For heavily CPU-limited games like Assetto Corsa Competizione (ACC), the tested resolution doesn’t matter much. Here, the 9800X3D is over 70% faster than the 7700X and 285K at 1080p, and still more than 60% faster at 4K with DLSS upscaling.

In this case, raising the resolution doesn't change much, which makes the 9800X3D a solid choice for competitive racing. While ACC may not demand frame rates as high as a shooter does, ACC players often value fps performance, and this is a useful tool to assess CPU gaming performance.

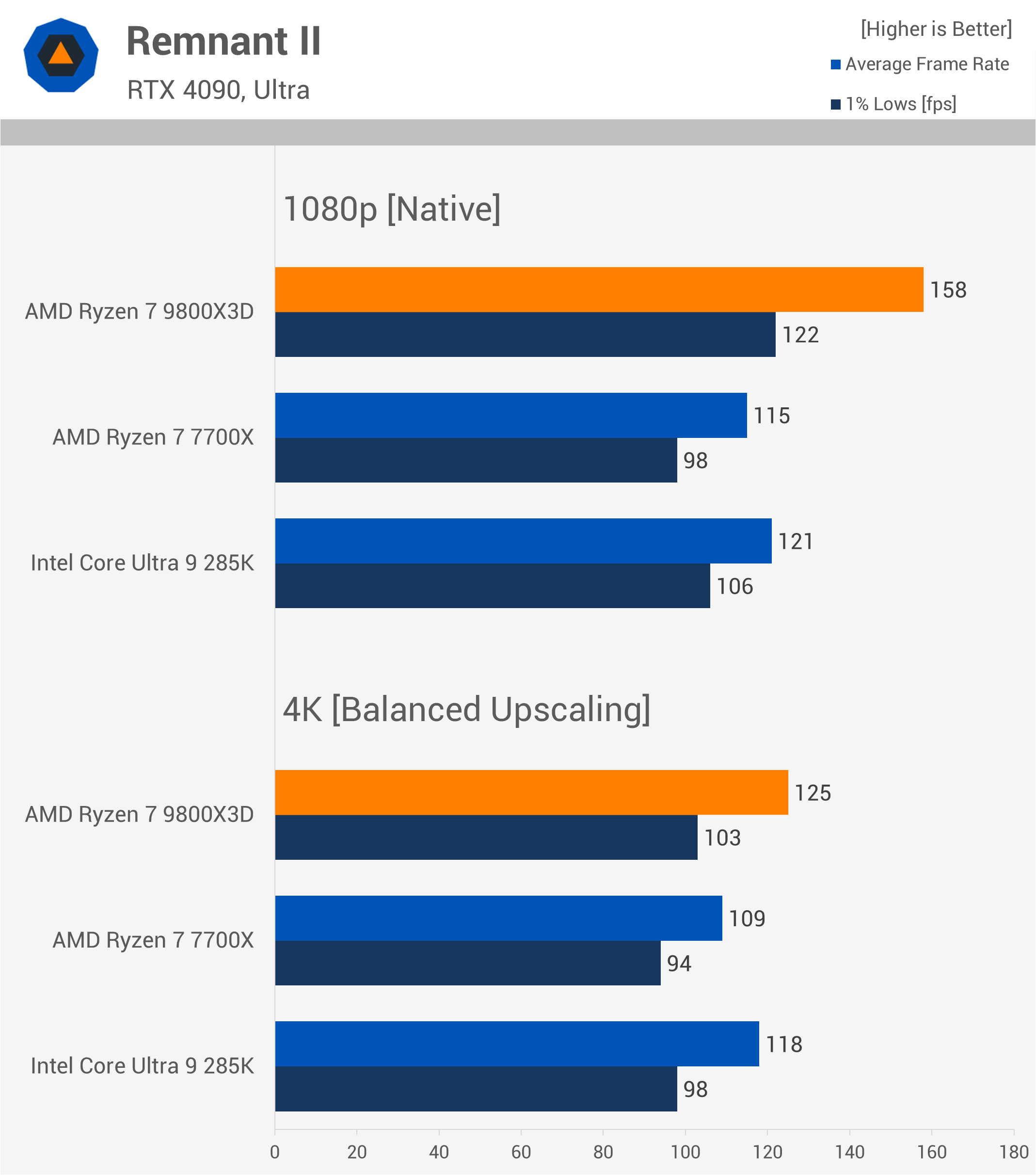

Remnant II

The Remnant II data is similar to what we observed in Cyberpunk and The Last of Us Part 1, though the 9800X3D still offers additional performance at 4K despite running into GPU limits. If 125 fps isn’t enough and you want 140 fps or more, the 9800X3D will allow your GPU to hit that target with the right quality settings, reaching up to 158 fps, whereas the 285K and 7700X simply can’t reach that high.

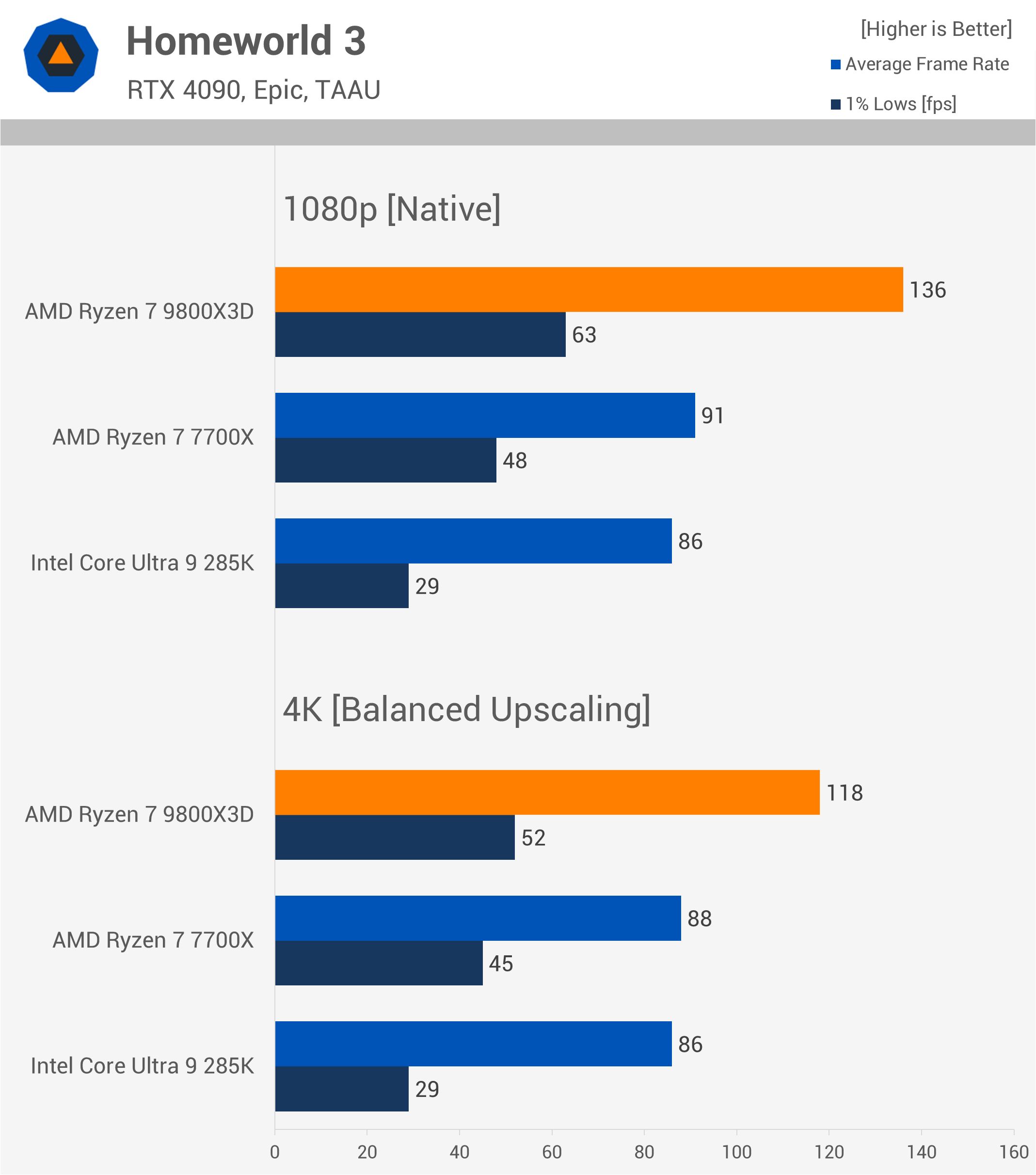

Homeworld 3

In CPU-limited games like Homeworld 3, even at 4K, the 9800X3D is still 34% faster than the 7700X, although the margin would be smaller without upscaling.

This is another example that challenges the notion that CPUs don’t matter at 1440p and 4K – they can, and in a meaningful way. While you don’t need extremely high frame rates for Homeworld 3, playing at over 100 fps rather than below it makes panning and unit control smoother.

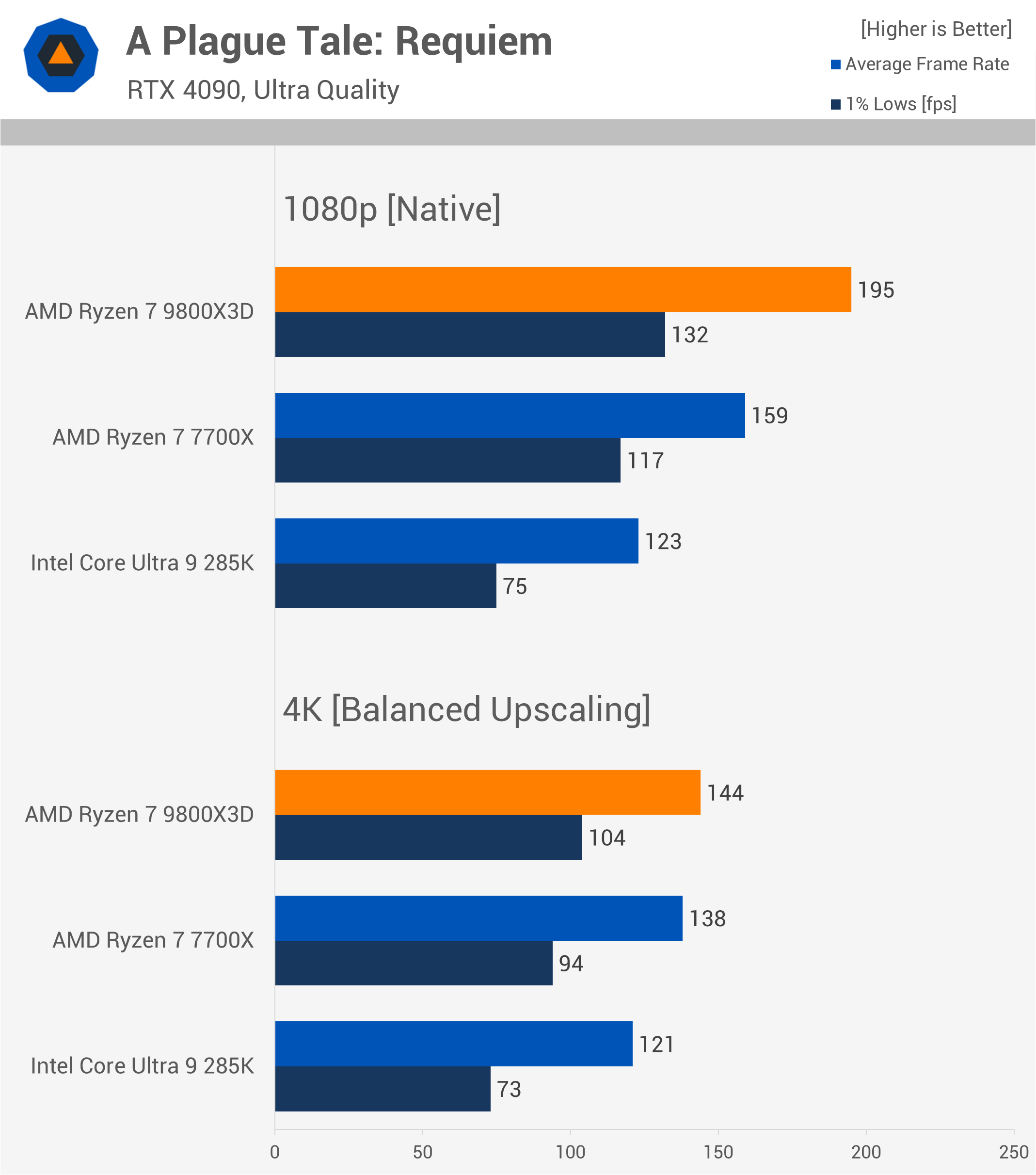

A Plague Tale: Requiem

The results for A Plague Tale: Requiem align with those in Cyberpunk: significant performance margins at 1080p, but smaller at 4K. This game is easily playable on the 7700X, with 159 fps on average being more than sufficient.

However, the 285K falls short here for a high-end product, managing 120 fps with concerning 1% lows. The 9800X3D, on the other hand, still delivers up to 42% better performance at 4K, which is impressive.

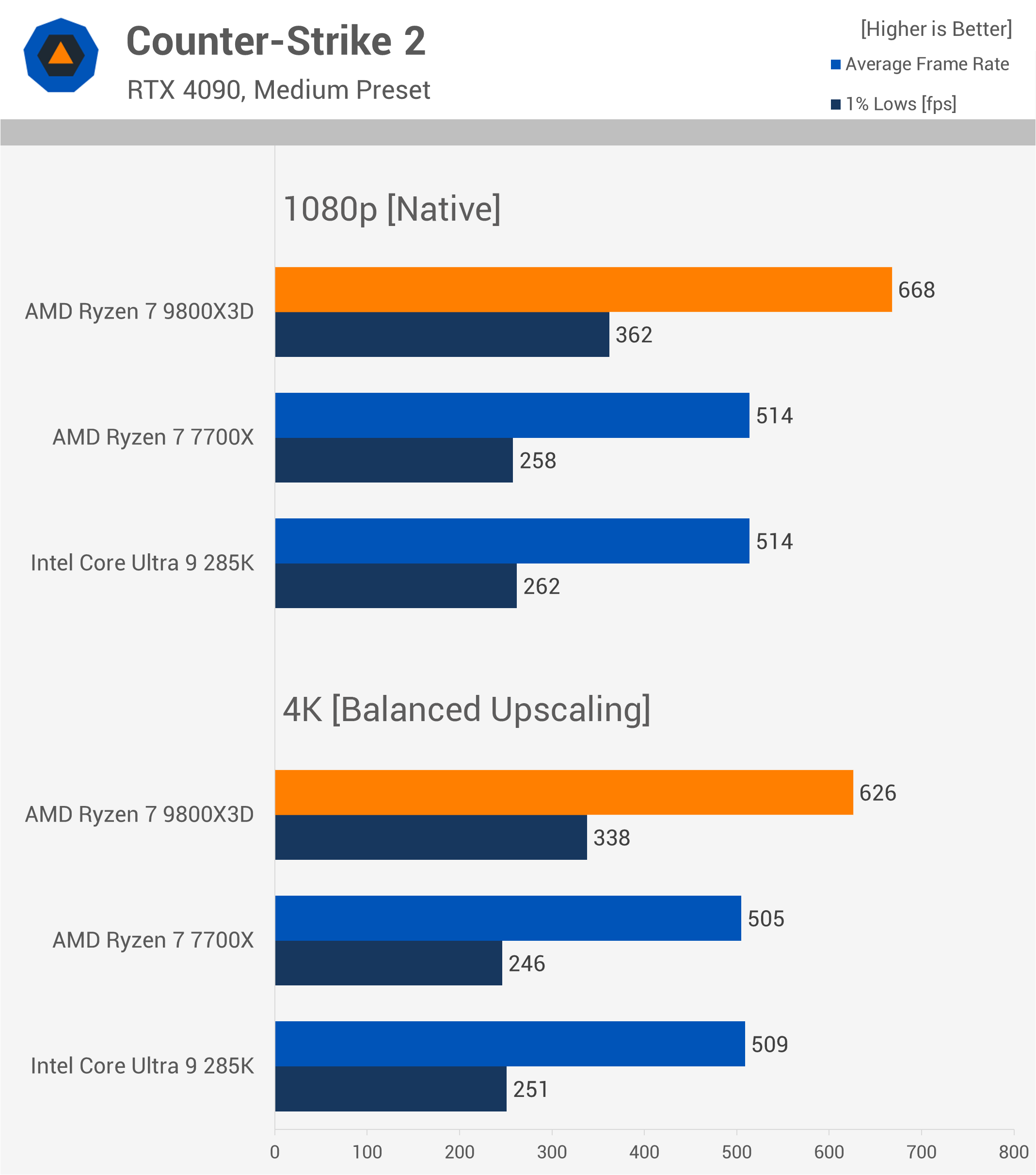

Counter-Strike 2

Our first true esports and competitive shooter example is Counter-Strike 2. This game is featured in our day-one reviews to represent competitive shooters like Valorant, Apex Legends, Fortnite, Warzone and so on.

In these games, players dial down graphical settings to medium or low, not just for higher fps but also for a competitive advantage in spotting and reacting to opponents.

The idea that only pro gamers do this is a misconception common among more casual gamers who rarely or never play competitive shooters. It's certainly not limited to the top 1% of competitive players: a large number of multiplayer gamers do it, and most lean into it as they try to improve their skill level. Consequently, players of these games often find themselves CPU-limited.

While one could argue that 500 fps is absurd, and the 24% jump from 505 fps on the 7700X to 626 fps on the 9800X3D at 4K is unnecessary, Counter-Strike 2 players are going bonkers over the 9800X3D. Our review data has spread across CS2 communities, and everyone seems very excited by the performance uplift we showed.

As a side note, testing 540Hz gaming monitors at Computex this year was a mind-blowing experience – the difference between 240Hz and 540Hz is significant for fast-paced shooters. It’s a reminder that there are different levels of gaming experiences; one isn’t necessarily better than another – they’re simply different, suited to individual preferences.

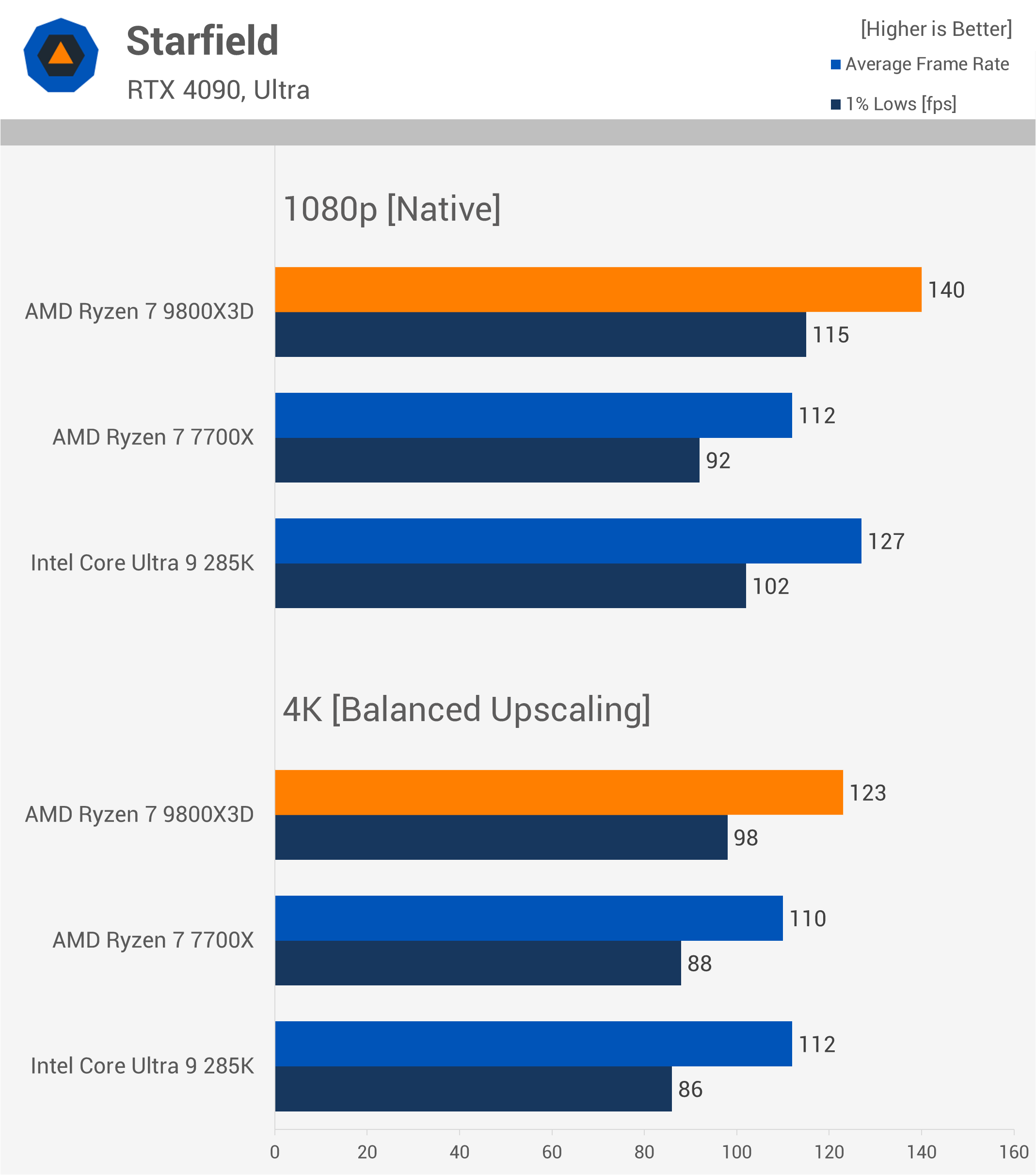

Starfield

Starfield is an extremely CPU-limited game. While the margins narrow at 4K, the 9800X3D still provides a slightly better experience. Additionally, you can lower the quality preset to achieve frame rates around 140 fps – or at least, you can with the 9800X3D, whereas you can’t with the 7700X.

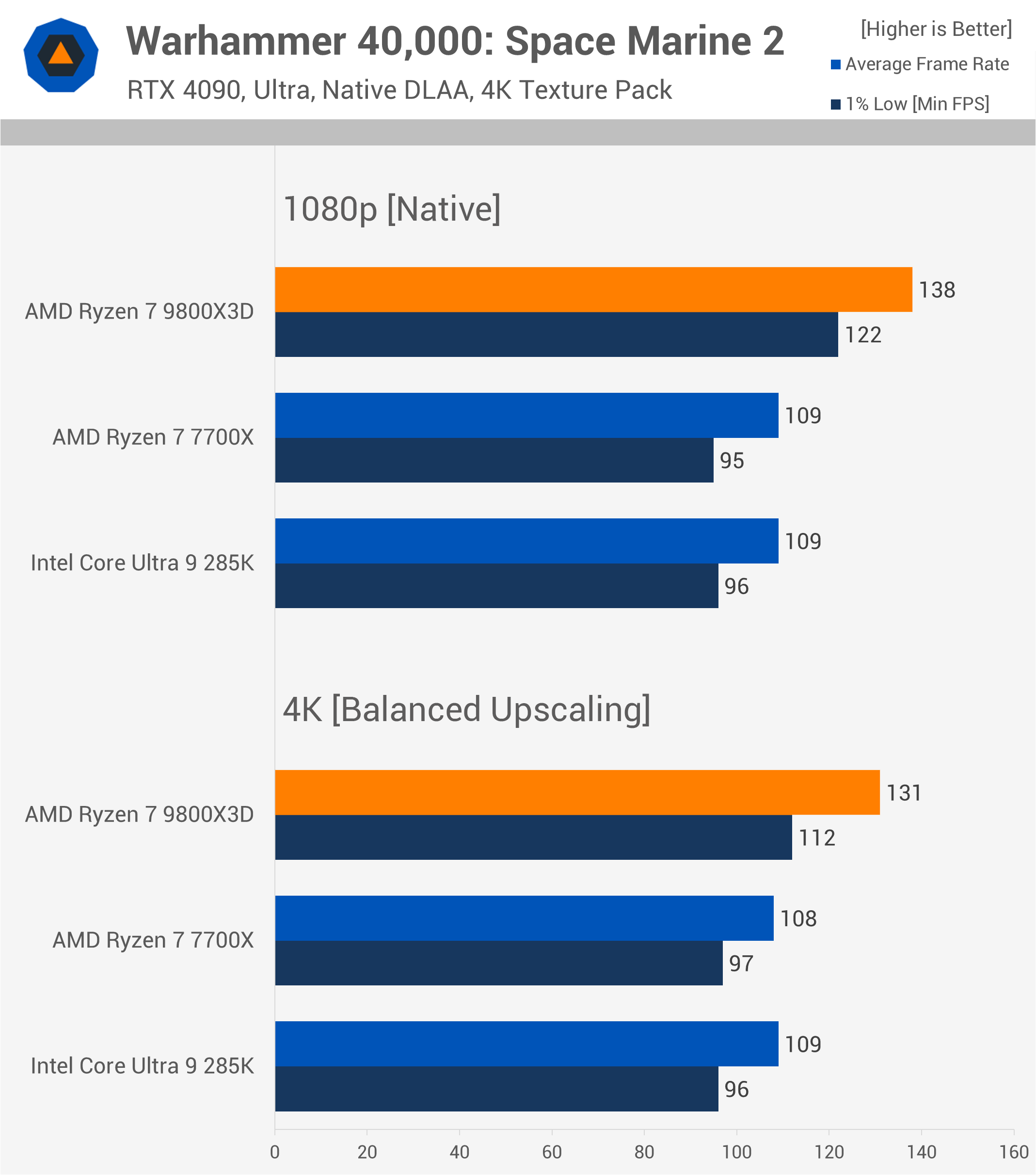

Warhammer 40,000: Space Marine 2

Space Marine 2 is another heavily CPU-limited game, and it’s very popular. Both the 7700X and 285K will limit you to just under 110 fps in more demanding sections, like the large horde battles, which are central to the game.

Even at 4K, the 9800X3D delivers 21% better performance than the 7700X, resulting in a noticeably smoother experience with an average of 131 fps. While the game is perfectly playable at 100 fps, the 9800X3D offers an elevated experience for those who want the best performance and are willing to pay a premium for it, and it delivers that advantage under real-world conditions.

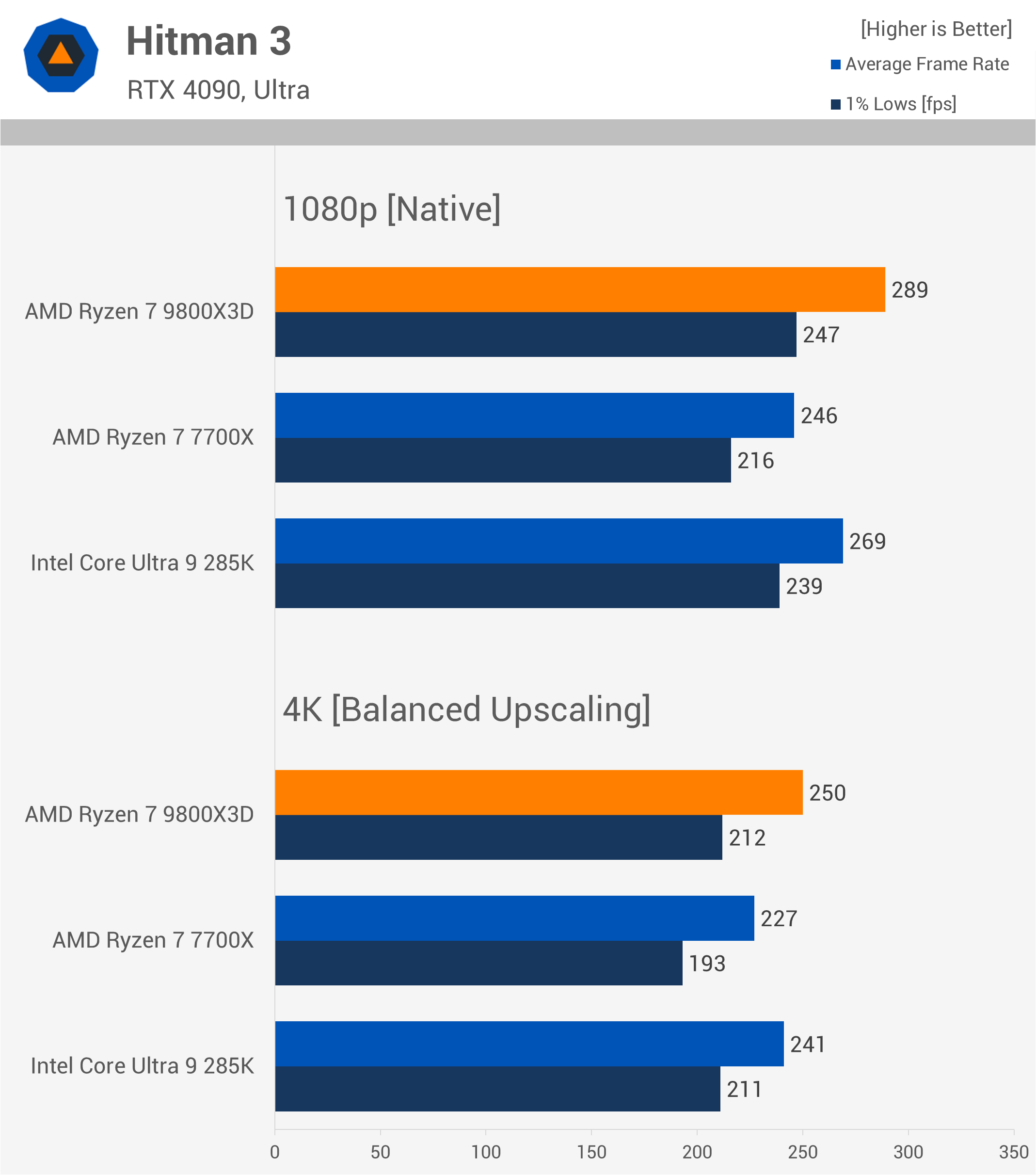

Hitman 3

Hitman 3 is almost four years old, making it one of the oldest games featured in our day-one reviews. The margins aren’t large at 1080p since these modern CPUs aren’t fully utilized. We still see some separation at 4K, but with well over 200 fps, it’s not especially meaningful. We include a few older games, though, to diversify the testing and highlight titles that were demanding during their peak.

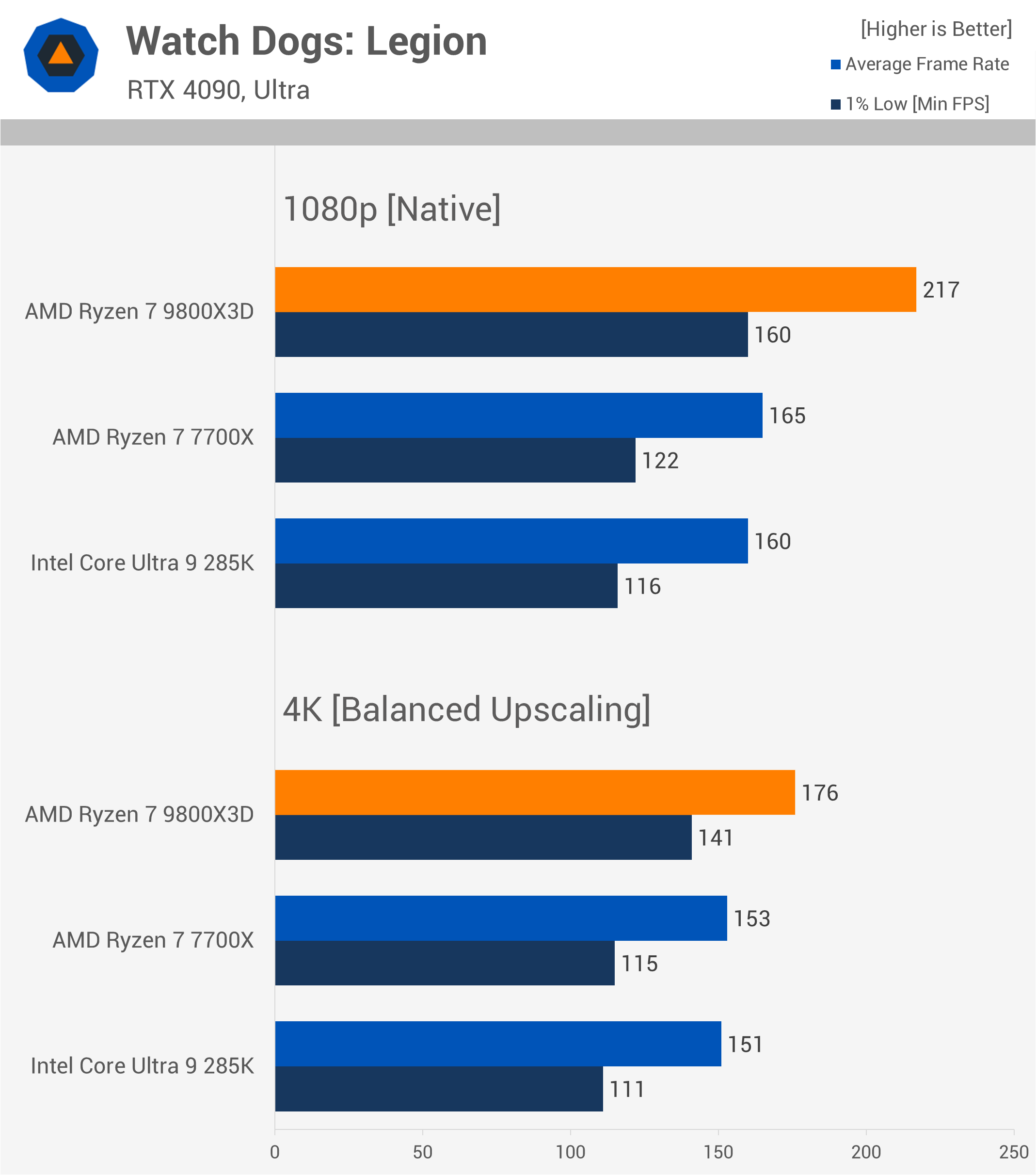

Watch Dogs: Legion

It’s a similar story with Watch Dogs: Legion – although the game remains surprisingly demanding, it’s not enough to challenge modern high-end CPUs, which can easily push over 150 fps.

This title serves more as a benchmark tool, showing the 9800X3D to be up to 32% faster at 1080p and 23% faster at 4K in terms of 1% lows, which is still a significant margin.

Star Wars Outlaws

Then we have Star Wars Outlaws, the only game where the 9800X3D didn’t offer a performance gain at 1080p, and naturally, the same holds true when frame rates are reduced by GPU limits.

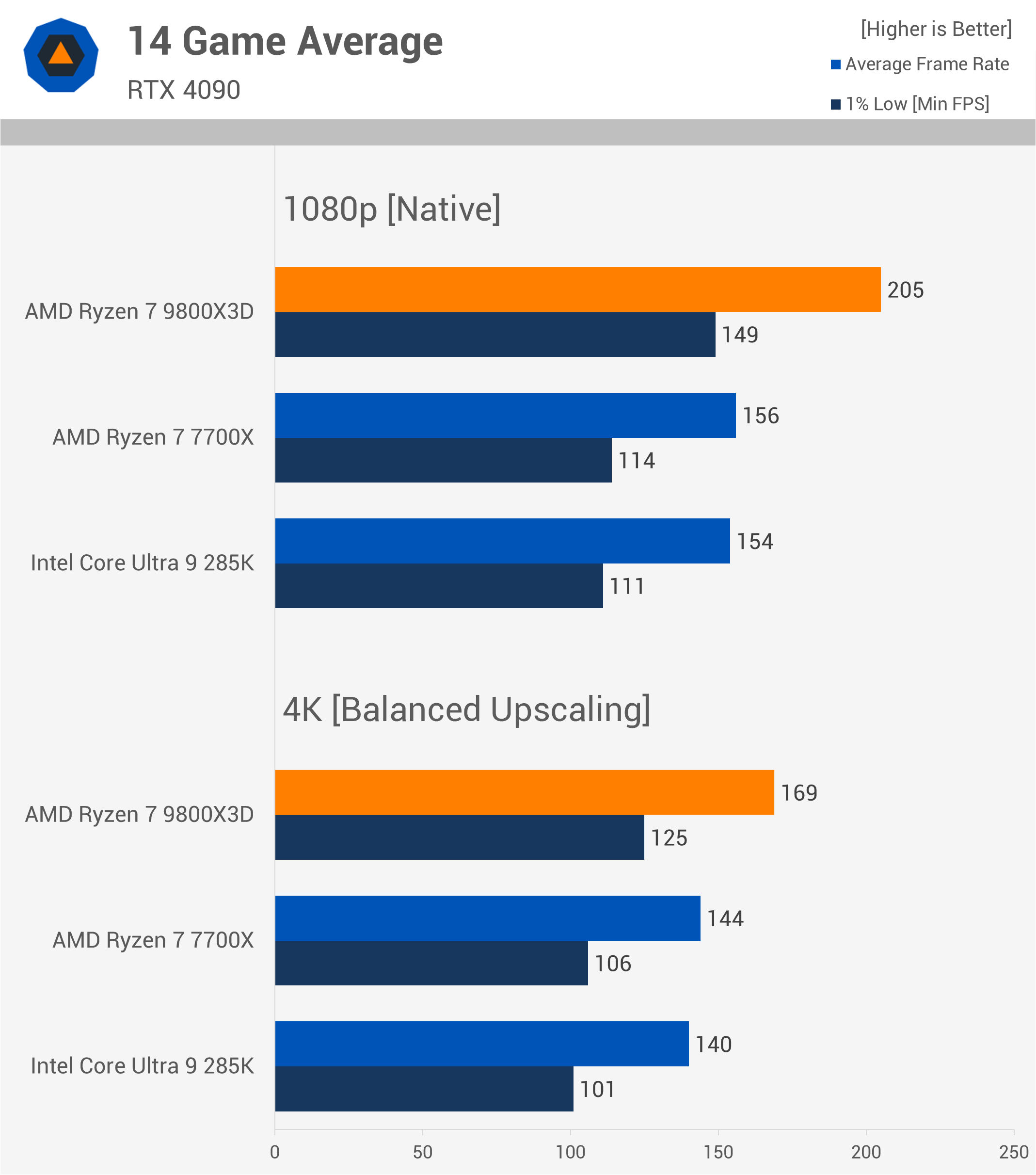

14 Game Average (1080p + 4K)

Here’s a look at the average performance across 14 games. At 1080p, we found the 9800X3D to be around 30% faster than the 7700X and 285K, whereas it was 17-21% faster at 4K using DLSS upscaling. So, still a solid performance gain on average, but as we’ve seen, the relevance of this margin depends on the type of gamer you are.
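When averaging margins across many games, one common approach is to take the geometric mean of the per-game fps ratios, so a single very high-fps title doesn't dominate the result. We're assuming that method here for illustration; the sketch isn't a description of how the 14-game figure above was calculated. The inputs are the 1080p 9800X3D vs 7700X margins quoted earlier for The Last of Us Part 1 (16%), Cyberpunk 2077 (32%) and Hogwarts Legacy (37%), and three games won't reproduce the 14-game average.

```python
import math


def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster A is than B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


def geomean_margin(ratios: list[float]) -> float:
    """Geometric mean of per-game fps ratios, returned as a percent margin."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("ratios must be positive and non-empty")
    log_mean = sum(math.log(r) for r in ratios) / len(ratios)
    return (math.exp(log_mean) - 1.0) * 100.0


# 9800X3D vs 7700X at 1080p, expressed as fps ratios (1.16 = 16% faster).
per_game = {
    "The Last of Us Part 1": 1.16,
    "Cyberpunk 2077: Phantom Liberty": 1.32,
    "Hogwarts Legacy": 1.37,
}

print(f"{geomean_margin(list(per_game.values())):.1f}% faster on average")
# The same helper reproduces the Jedi: Survivor margin (234 vs roughly 155 fps).
print(f"Jedi: Survivor: {percent_faster(234, 155):.0f}% faster")
```

A geometric mean always falls between the smallest and largest per-game margins, which is why one outlier title can't swing the average as much as it would with a plain mean of fps values.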

So we’ve now established that there can be a difference at 4K under realistic conditions, and depending on the game and how you want to play, that difference can either be significant or meaningless.

Some of you may appreciate the 4K data, but ultimately it doesn’t tell us anything that we can’t already learn from the 1080p data. By cross-referencing that information with what a given GPU can achieve at specific resolutions and quality settings, we get a clearer picture. Learning what an RTX 4090 does at 4K with DLSS Balanced and our chosen preset for testing isn’t likely to provide the insights you think it does.
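One way to do that cross-referencing, sketched under the simplified assumption that delivered fps is roughly the lower of the CPU-limited and GPU-limited results: take each CPU's low-resolution figure from a CPU review, take your GPU's figure at your resolution and settings from a GPU review, and compare the lower of the two with your target. The CPU figures are the Star Wars Jedi: Survivor 1080p results from above (the 285K value is our approximation); the GPU figure and the target frame rate are hypothetical.

```python
# Estimate delivered fps by combining CPU-review and GPU-review data.
# Assumption: delivered fps is roughly min(CPU-limited fps, GPU-limited fps).

def estimate_fps(cpu_limited_fps: float, gpu_limited_fps: float) -> float:
    return min(cpu_limited_fps, gpu_limited_fps)


# CPU-limited (1080p) results from the Star Wars Jedi: Survivor section.
cpu_limited = {"Ryzen 7 9800X3D": 234.0, "Core Ultra 9 285K": 155.0}

gpu_limited = 180.0  # hypothetical: your GPU at your resolution and preset
target_fps = 165.0   # hypothetical personal target

for cpu, cpu_fps in cpu_limited.items():
    est = estimate_fps(cpu_fps, gpu_limited)
    verdict = "meets" if est >= target_fps else "misses"
    print(f"{cpu}: ~{est:.0f} fps, {verdict} the {target_fps:.0f} fps target")
```

Drop the GPU figure below 155 fps and both estimates converge on the same number, which is exactly what a heavily GPU-limited 4K chart shows: it reflects the graphics card, not the processors.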

But if we haven’t convinced you yet, let’s wind back the clock to 2022 and compare the Ryzen 9 3950X, Ryzen 7 5800X, and Ryzen 7 5800X3D in three popular games from that time. The 3950X was still available in 2022 and cost more than the 5800X3D, but it had twice as many cores, making it an interesting comparison for the purposes of this article.

CPU Longevity Test: 3950X, 5800X, 5800X3D in 2022 Games

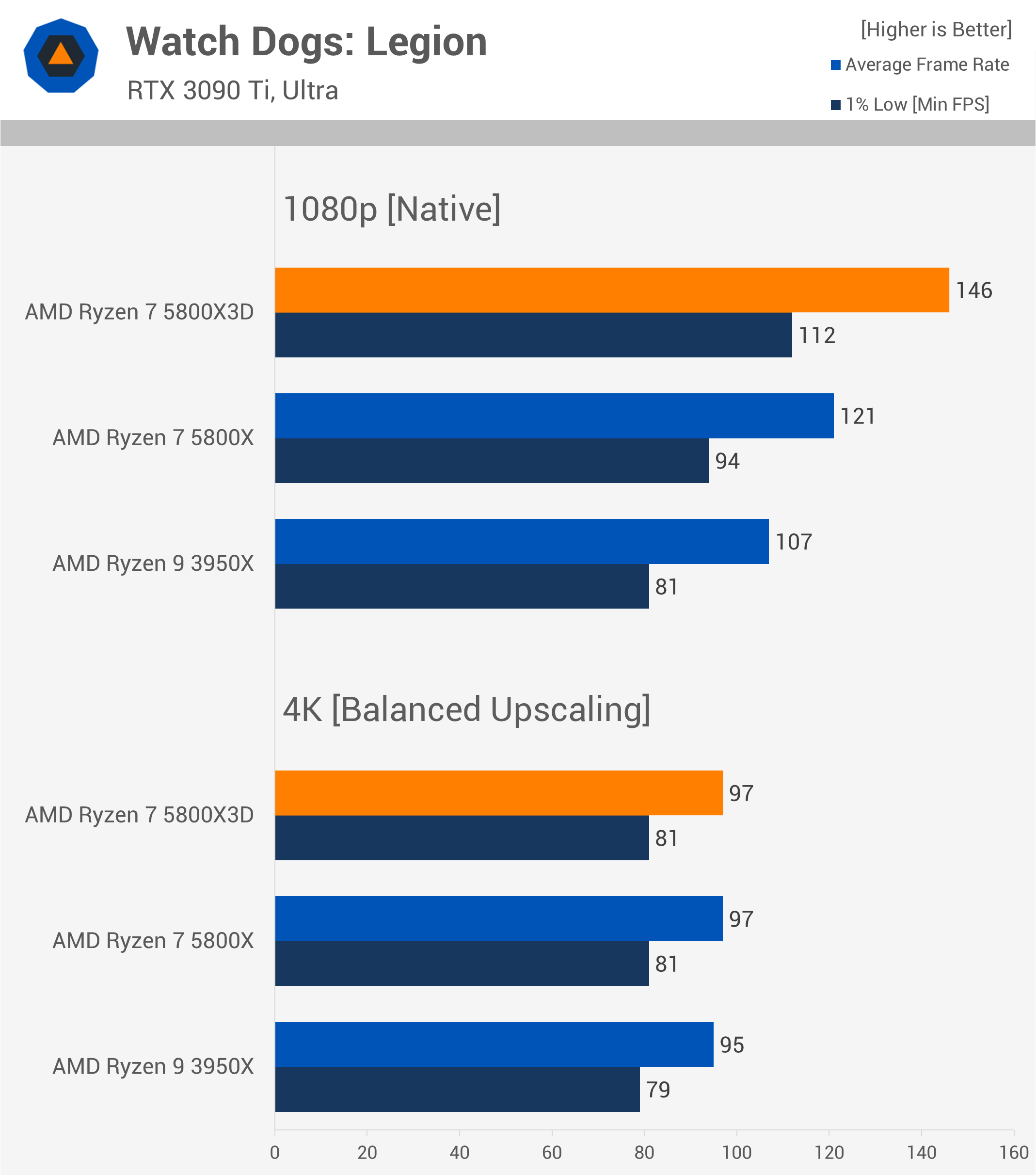

Watch Dogs: Legion

Now, if we compare these CPUs at 1080p using the RTX 3090 Ti, which was the flagship GPU used for testing back in 2022, we find that the 5800X3D is 21% faster than the 5800X and 36% faster than the 3950X. So, if all parts were for some reason retailing for roughly the same price, the clear choice would be the 5800X3D based on this data.

But what if we benchmarked at 4K with DLSS upscaling?

In this scenario, frame rates are capped just shy of 100 fps, as the 3090 Ti can’t go any faster using the Ultra preset under these conditions. Was this data useful back in 2022?

Only if you wanted to play Watch Dogs: Legion at 4K with DLSS and the Ultra preset, using an RTX 3090 Ti, and were content with 97 fps on average. If you wanted 140 fps, you would have lowered the quality preset and ideally chosen the 5800X3D.

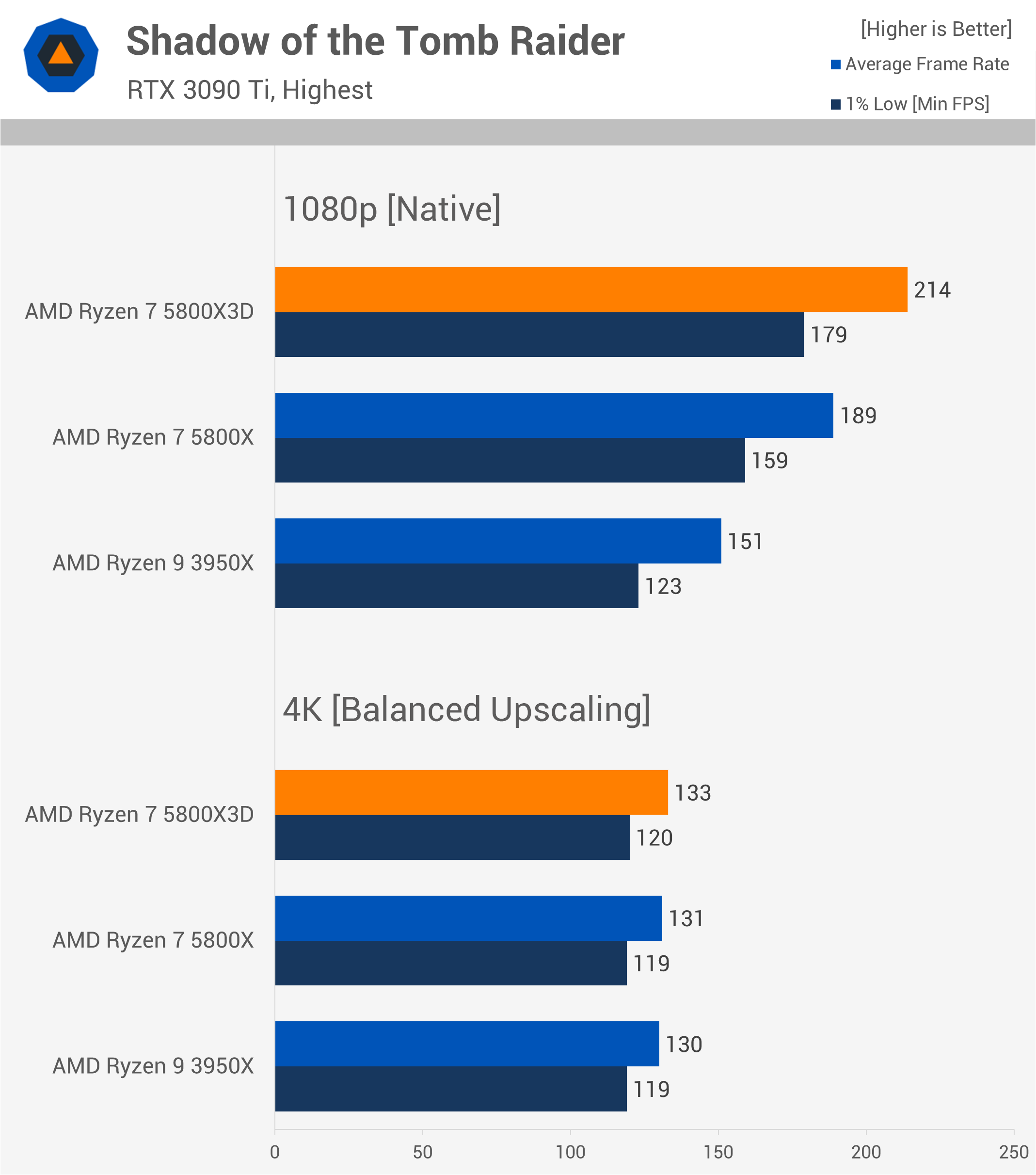

Shadow of the Tomb Raider

It’s a similar story with Shadow of the Tomb Raider, though 130 fps is a high enough frame rate that almost all gamers would find acceptable. However, for evaluating actual CPU performance to choose the best-performing CPU in 2022 and one that would age well, the 4K data is practically useless. While the 5800X3D was 42% faster than the 3950X at 1080p, it was only 2% faster at 4K.

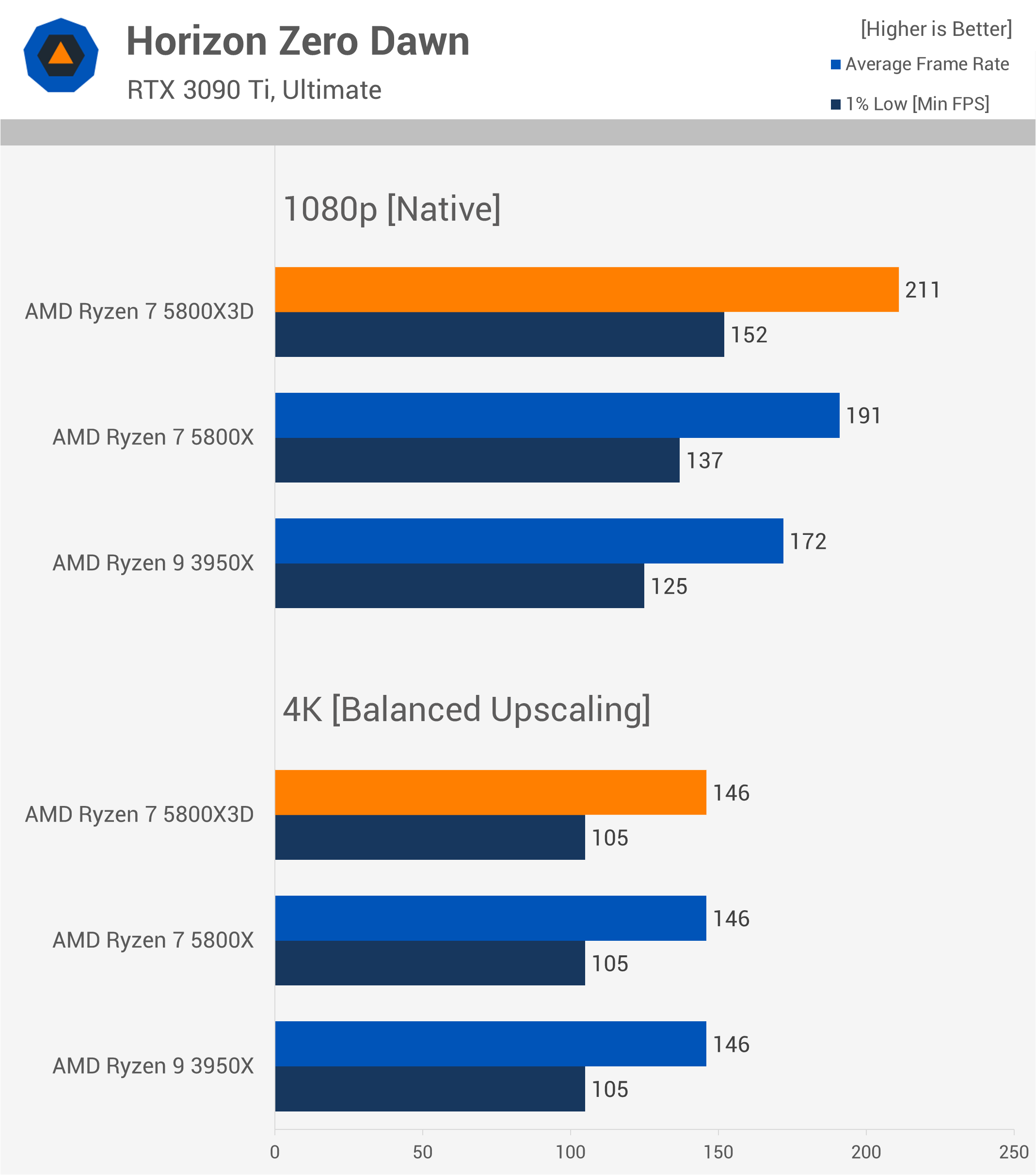

Horizon Zero Dawn

In Horizon Zero Dawn, we see a similar trend. Here, the 3090 Ti reaches 146 fps at 4K, which is ample performance, but with all CPUs capable of pushing over 170 fps in this title, the 4K results are heavily GPU-limited and don’t reveal anything meaningful about actual CPU performance.

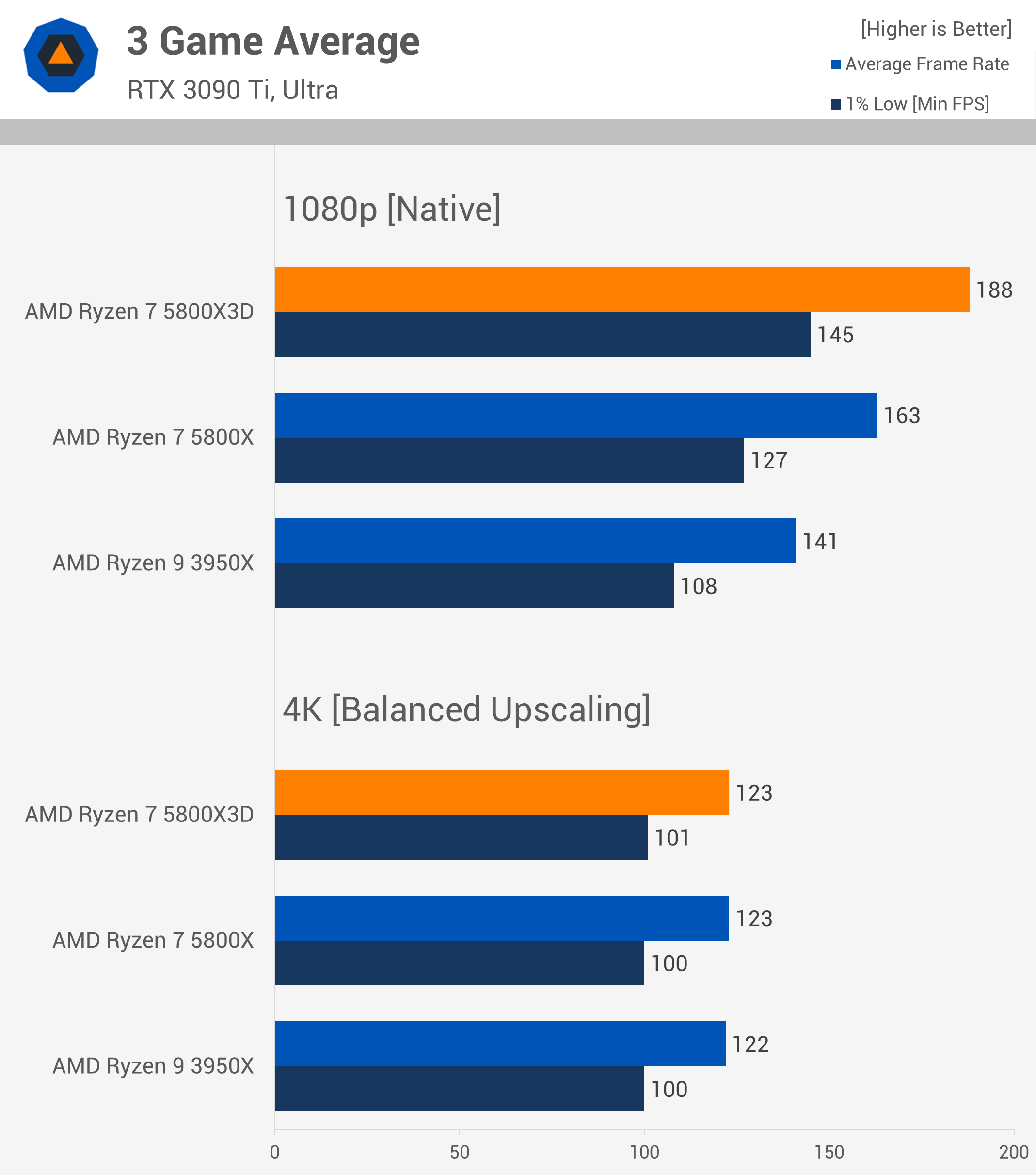

3 Game Average (2022 Titles)

Across just these three games from 2022 or earlier, we found that the 5800X3D was, on average, 15% faster than the 5800X and 33% faster than the 3950X at 1080p, with an RTX 3090 Ti. However, if we focused solely on the 4K upscaling results, we’d see all three processors delivering identical performance. For those opposed to low-resolution testing, this could be misleading and lead to undesirable outcomes.

For example, a novice PC builder might opt for the 3950X because it has twice as many cores and might seem like it would age better. Essentially, without low-resolution testing, you’re going in blind and may make incorrect assumptions. To illustrate how badly this decision could age, let’s examine how these CPUs perform in more modern games with even more powerful gaming hardware.

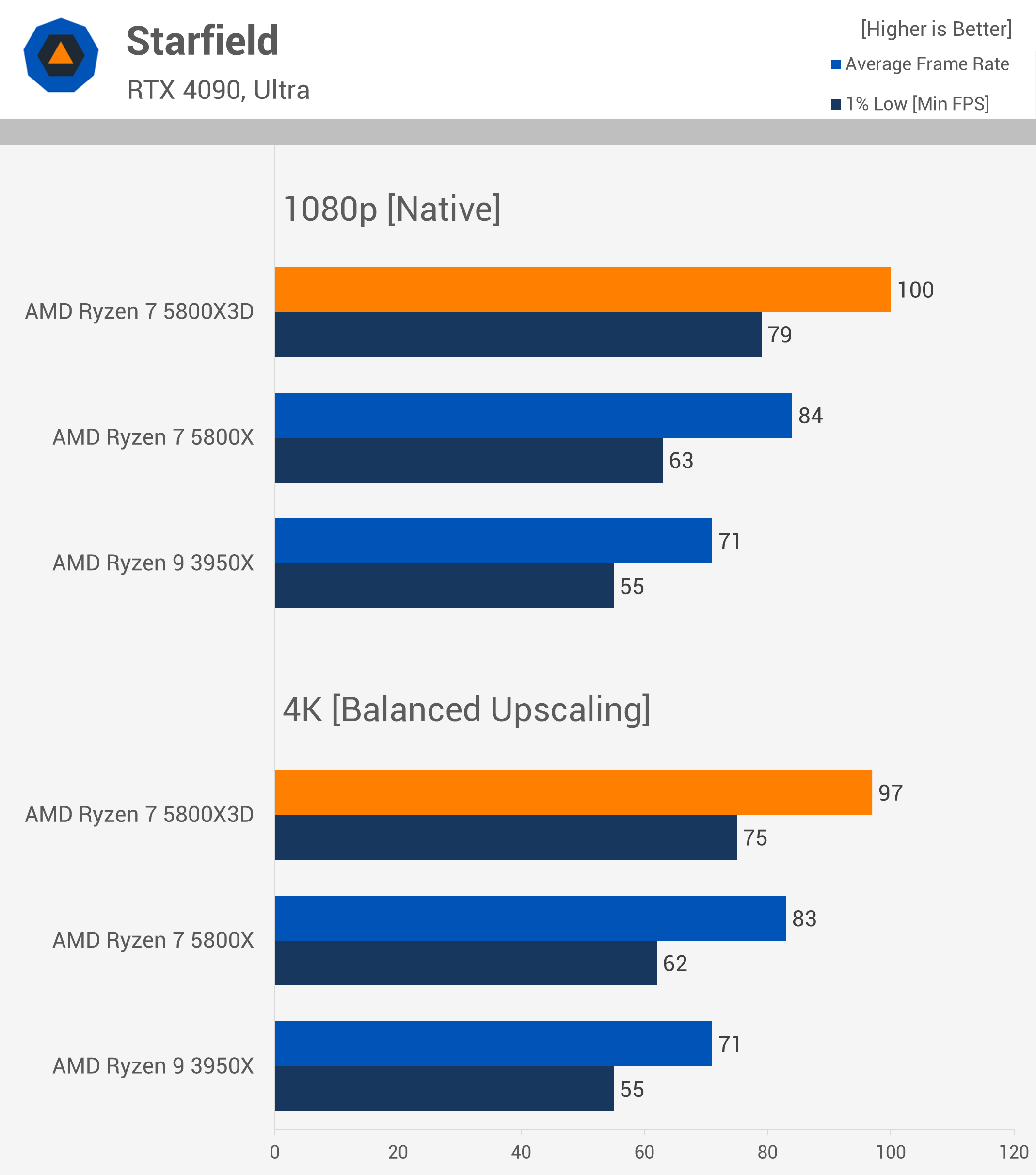

Starfield

Starfield, released in late 2023, is known to be very CPU-limited in sections with many NPCs. Testing at 1080p or 4K with upscaling, we see results that sharply contrast with the 2022 sample. The 5800X and 3950X are completely CPU-limited here, and the same is largely true for the 5800X3D. As a result, the 5800X3D was 17% faster than the 5800X at 4K and 36% faster than the 3950X – margins consistent with our previous averages at 1080p.

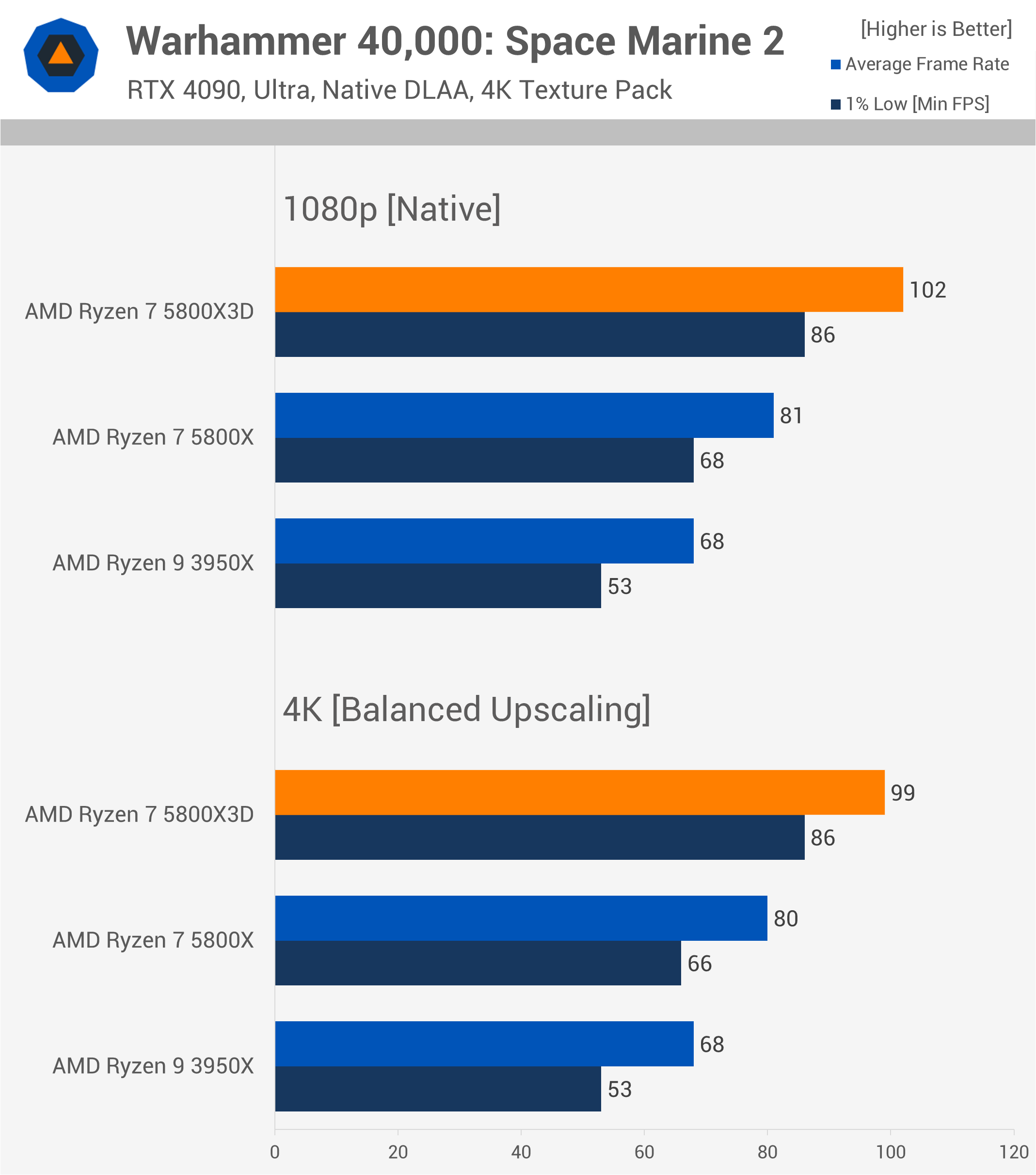

Warhammer 40,000: Space Marine 2

We see similar results with Space Marine 2: at 4K, the 5800X3D is 24% faster than the 5800X and an impressive 46% faster than the 3950X, resulting in a completely different gaming experience when comparing these CPUs in a modern title at 4K with upscaling.

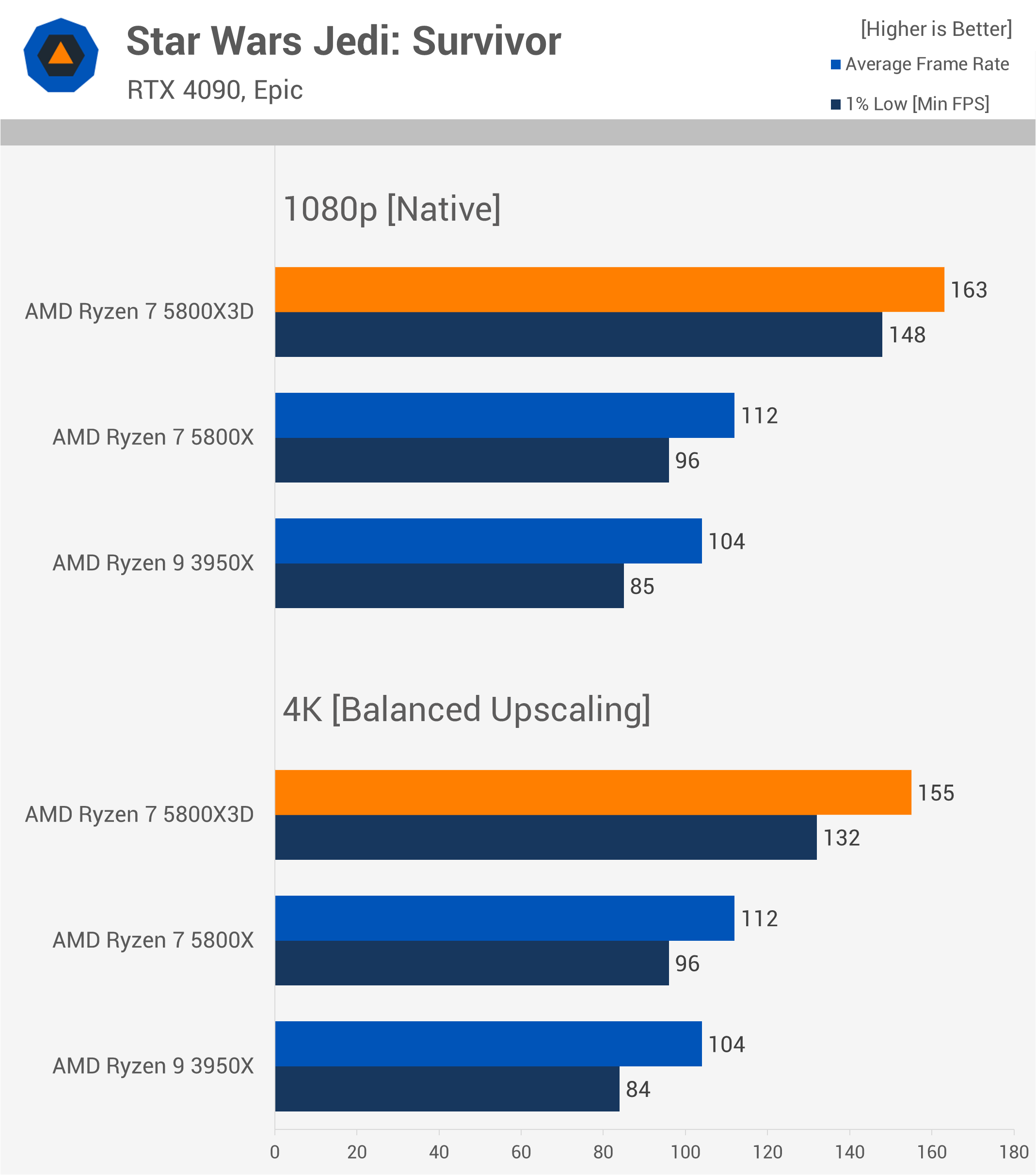

Star Wars Jedi: Survivor

In Star Wars Jedi: Survivor, another CPU-demanding game, the 3950X and 5800X still perform reasonably well, but the 5800X3D pulls ahead, delivering 38% more performance than the 5800X at 4K and 49% more than the 3950X. If you’re aiming for a high-refresh rate experience in this title, the 5800X3D is the better choice, whereas the 5800X or 3950X may struggle to keep up.

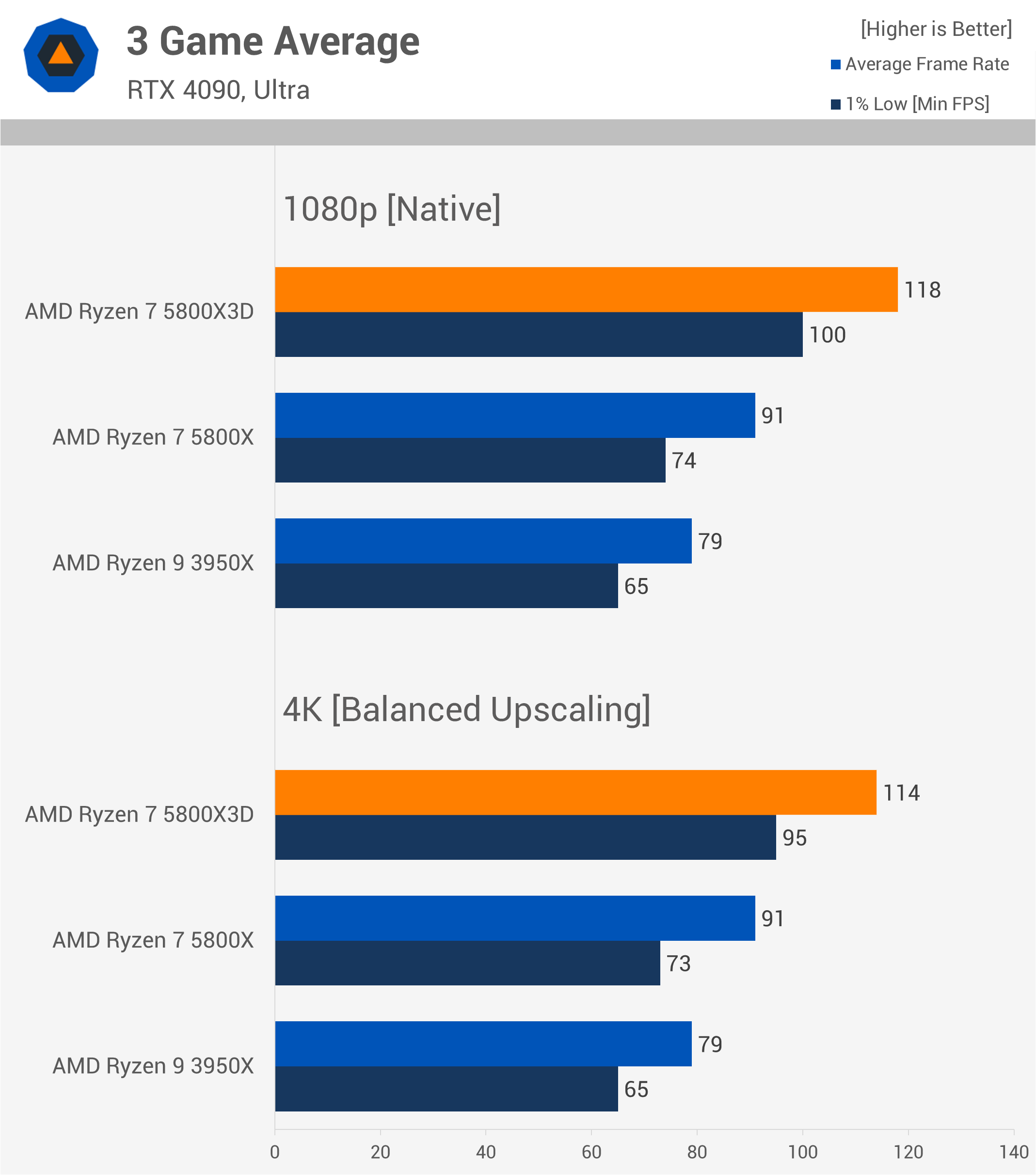

3 Game Average (2023-24 Titles)

This updated average graph shows a noticeable shift. Although this data is based on a small sample of CPU-demanding games, the results indicate significant differences.

At 4K, the 5800X3D was, on average, 25% faster than the 5800X and 44% faster than the 3950X – substantial margins. While we’re testing with an RTX 4090, you’d likely see similar margins with an RTX 4070 Super if you dialed down the quality settings a bit.

This highlights how low-resolution testing accurately reveals CPU gaming performance and serves as a strong predictor of future gaming performance, as clearly demonstrated here.

What We Learned

Hopefully, by this point, you understand why testing CPU performance with a strong GPU bottleneck is a flawed approach and significantly more misleading than the well-established testing methods used by nearly all tech media outlets.

We get why some readers prefer what’s often referred to as “real-world” testing, but in the context of CPU benchmarks, it doesn’t actually provide the insight you might think – unless you plan on using that exact hardware combo under those specific conditions.

Moreover, CPU reviews aren’t meant to push you into upgrading your CPU or upsell you on a more expensive one. The purpose of low-resolution testing is to inform you of the real performance and value the CPU offers in today’s games, especially in the most demanding sections, and often gives a glimpse of how things might look in the future.

There are also aspects you need to work out on your end – whether you actually need a CPU upgrade and, if so, what that upgrade should look like. As we’ve said multiple times, if you’re more of a casual gamer, mainly playing single-player games at around 60 fps, chances are you’re not CPU-limited, unless you have a very old CPU. If that’s the case, you’re probably interested in a more cost-effective upgrade, like a Ryzen 5 7600 or Ryzen 7 7700X.

Looking at the average frame rate performance from our recent day-one CPU review data, we see that the 7600X achieved an average of 166 fps, while the slowest CPU tested, the Core i5-12600K, was good for 139 fps. Given this, almost any modern CPU should be able to deliver the level of performance you find acceptable in the latest games. We even saw the older Ryzen 9 3950X average just under 80 fps in the three demanding modern titles we tested.

So, step one is to determine if your system’s gaming performance is CPU-limited. If it is, then decide on your budget for a CPU upgrade. You can check out any of our cost-per-frame data graphs to see which options offer the best value.

Alternatively, if you’re after high-end performance and want the best of the best, you don’t need to look at cost-per-frame data – just go for the fastest gaming CPU, which is currently the 9800X3D. Low-resolution benchmarks confirm this, and we’re confident future titles will show similar results to what we saw when comparing the Ryzen 3000 and 5000 series processors.

Anyway, we look forward to all the negative comments about 1080p benchmarks in future CPU reviews – it's easy engagement, after all.